A major US medical body is encouraging stressed doctors to use ChatGPT to free up time.

A new study looked at how well the AI model can interpret and summarize complicated medical studies, which doctors are encouraged to read to stay up to date on the latest research and treatment advances in their field.

The researchers found that the chatbot was accurate 98 percent of the time, providing doctors with quick, reliable summaries of studies in a variety of specialties, from cardiology and neurology to psychiatry and public health.

The American Academy of Family Physicians said the results showed that ChatGPT “is likely useful as a screening tool to assist busy physicians and scientists.”

ChatGPT was very effective at summarizing new clinical studies and case reports, suggesting that busy doctors could rely on the AI tool to learn about the latest advances in their fields in a relatively short period of time.

Separate, earlier research found that the platform had an overall accuracy of 72 percent in clinical decision-making, and that it was better at making a final diagnosis, with 77 percent accuracy. Research has also found that it can pass a medical licensing exam and be more empathetic than real doctors.

The report comes at a time when AI is quietly creeping into healthcare. According to an American Medical Association survey, two-thirds of doctors see its benefits, and 38 percent report that they already use it as part of their daily practice.

Approximately 90 percent of hospital systems use AI in some form, up from 53 percent in the second half of 2019.

Meanwhile, an estimated 63 percent of doctors experienced symptoms of burnout in 2021, according to the American Medical Association.

While the Covid pandemic exacerbated physician burnout, the rate was already high beforehand: about 55 percent of doctors reported feeling burned out in 2014.

The hope is that AI technology will help alleviate the high burnout rates that are contributing to the physician shortage.

Kansas doctors affiliated with the American Academy of Family Physicians evaluated the AI's ability to analyze and summarize clinical reports from 14 medical journals. They found that it interpreted the reports correctly and could produce accurate summaries for doctors to read and digest in a timely manner.

Serious inaccuracies were rare, suggesting that busy doctors could rely on AI-generated study summaries to learn about the latest techniques and developments in their fields without sacrificing valuable time with patients.

The researchers said: “We conclude that because ChatGPT summaries were 70% shorter than abstracts and usually of high quality, high accuracy, and low bias, they are likely to be useful as a screening tool to help busy clinicians and scientists more rapidly evaluate whether further review of an article is likely to be worthwhile.”

Doctors at the University of Kansas tested ChatGPT-3.5, the version commonly used by the public, to determine whether it could summarize the abstracts of medical research articles and judge how relevant those articles were to various medical specialties.

They fed ten articles from each of the 14 journals into ChatGPT, a large language model designed to understand, process and generate human language based on training with large amounts of text data. The journals covered a range of health topics, such as cardiology, pulmonary medicine, public health and neurology.

They found that ChatGPT was able to produce high-quality, high-accuracy, low-bias summaries of the abstracts despite a 125-word limit.

Only four of the 140 summaries produced by ChatGPT contained serious inaccuracies. One of them left out a serious risk factor for a health condition: being a woman.

Another stemmed from a semantic misunderstanding by the model, while the others resulted from misinterpreting the study methods, such as whether a trial was double-blind.

The researchers said: “We conclude that ChatGPT summaries have rare but important inaccuracies that prevent them from being considered a definitive source of truth.”

“Clinicians are strongly cautioned not to rely solely on ChatGPT-based summaries to understand study methods and results, especially in high-risk situations.”

Still, most of the inaccuracies, which appeared in 20 of the 140 articles, were minor and mostly related to ambiguous language. They were not serious enough to substantially change the intended message or conclusions of the text.

Both the medical community and the general public have accepted AI in healthcare with some reservations, largely preferring that a doctor verify ChatGPT's responses, diagnoses, and medication recommendations.

All of the articles were published in 2022. The researchers chose them deliberately, because the AI model had been trained on data published through 2021.

By using text that had not been part of the model's training data, the researchers could obtain the most organic responses possible from ChatGPT, uncontaminated by prior exposure to the studies.

ChatGPT was asked to “self-reflect” on the quality, accuracy, and biases of its written study summaries.

Self-reflection is a powerful tool for AI language models. It allows chatbots to evaluate their own performance on specific tasks, such as analyzing scientific studies, by using complex algorithms to compare methodologies against established standards and using probability to gauge their level of uncertainty.

Keeping up to date with the latest advances in their field is one of the many responsibilities a doctor has. But the demands of their jobs, particularly caring for their patients in a timely manner, often mean they lack the time to delve into academic studies and case reports.

There have been concerns about inaccuracies in ChatGPT responses, which could endanger patients if not reviewed by trained doctors.

A study presented last year at a conference of the American Society of Health-System Pharmacists reported that almost three quarters of ChatGPT responses to medication-related questions reviewed by pharmacists were found to be incorrect or incomplete.

At the same time, an external panel of doctors found that ChatGPT’s answers to medical questions were more empathetic and of higher quality than doctors’ answers 79 percent of the time.

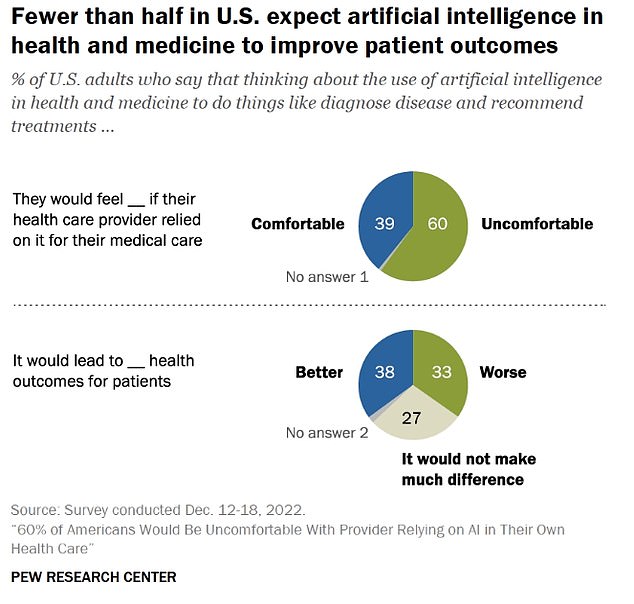

The public's appetite for AI in healthcare appears low, especially if doctors become overly reliant on it. A 2023 survey conducted by Pew Research Center found that 60 percent of Americans would be "uncomfortable" if their own healthcare provider relied on it.

Meanwhile, 33 percent of people said it would lead to worse outcomes for patients, while 27 percent said it would make no difference.

Time-saving measures are crucial so that doctors can spend more time with the patients in their care. Currently, doctors have between 13 and 24 minutes to spend with each patient.

Other responsibilities, such as patient billing, electronic medical records, and scheduling, consume an increasing share of physicians' time.

The average doctor spends almost nine hours a week on administration. Psychiatrists spend the greatest proportion of their time on it, 20 percent of their work week, followed by internists (17.3 percent) and family/general practitioners (17.3 percent).

The administrative workload is taking a measurable toll on American doctors, who had been experiencing increasing levels of burnout even before the global pandemic. The Association of American Medical Colleges projects a shortage of up to 124,000 doctors by 2034, a staggering number that many attribute to rising burnout rates.

Dr. Marilyn Heine, a board member of the American Medical Association, said, “AMA studies have shown that high levels of administrative burden and physician burnout exist and that the two are related.”

The latest findings were published in the journal Annals of Family Medicine.