Calling your GP and making an appointment on the same day may seem like the minimum requirement for a health service to work.

But waiting a month or more to see a doctor is increasingly common, a new analysis shows. One in 20 patients in England is forced to wait at least four weeks to see their GP. The rate is approaching one in 10 in the worst-affected areas of the country, which include Gloucestershire and Derbyshire. Some areas, such as the Vale of York, have seen an 80 per cent increase in four-week waits in just one year.

Here, Mail+ has compiled a wealth of NHS data to reveal how your GP is faring in the national battle to see a doctor.

The guide will show you how your GP practice ranks on a wide range of appointment access data, including which practices are best for same-day appointments and how many other patients you are competing with to avoid queues. All data is correct as of November.

To create this comparison, we looked at the number of full-time equivalent (FTE) GPs across more than 6,000 practices in England and compared this to the number of registered patients.

Comparing the number of FTE GPs provides a more accurate description of the situation in your practice, given the number of doctors now working part-time.

Health chiefs say a ratio of more than 1,800 patients per GP could be unsafe, as GPs risk rushing through appointments or burning out under their patient loads.

This, in turn, can increase the risk of doctors missing early signs of serious illness in their patients, with potentially devastating consequences.

Our analysis of GP data found that more than half (52 per cent) of practices were above the threshold of 1,800 patients per doctor.
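For readers who want to check the arithmetic for their own surgery, the comparison can be sketched in a few lines of Python. This is only an illustration of the calculation described above, not the code used for the analysis: the practice names and figures are made up, and `Practice`, `patients_per_fte_gp` and `share_above_limit` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Threshold cited by health chiefs: patients per full-time equivalent GP.
SAFE_LIMIT = 1800


@dataclass
class Practice:
    name: str
    registered_patients: int
    fte_gps: float  # full-time equivalent GPs, so part-time doctors count as fractions


def patients_per_fte_gp(practice: Practice) -> float:
    """Registered patients divided by FTE GPs."""
    if practice.fte_gps <= 0:
        raise ValueError(f"{practice.name} has no FTE GPs recorded")
    return practice.registered_patients / practice.fte_gps


def share_above_limit(practices: list[Practice], limit: float = SAFE_LIMIT) -> float:
    """Fraction of practices whose patients-per-GP figure exceeds the limit."""
    above = sum(1 for p in practices if patients_per_fte_gp(p) > limit)
    return above / len(practices)


# Made-up figures for illustration only, not real practice data.
examples = [
    Practice("Example Surgery A", registered_patients=9000, fte_gps=6.0),
    Practice("Example Surgery B", registered_patients=8000, fte_gps=4.0),
]

for p in examples:
    ratio = patients_per_fte_gp(p)
    flag = "above" if ratio > SAFE_LIMIT else "within"
    print(f"{p.name}: {ratio:.0f} patients per FTE GP ({flag} the 1,800 threshold)")
```

Using FTE figures rather than a simple headcount means two doctors who each work half the week count as one GP, which is why the ratio can look worse than the number of names on the surgery door suggests.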

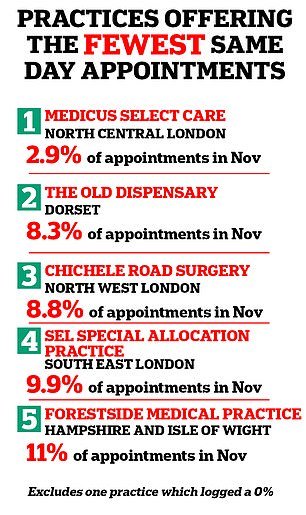

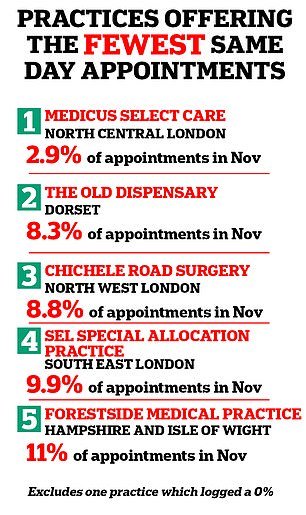

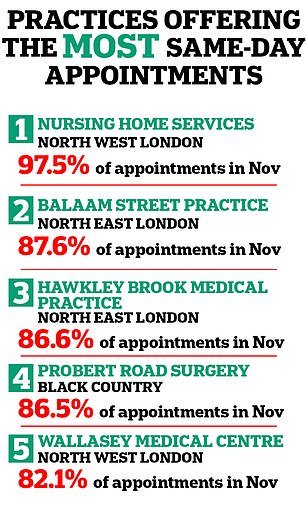

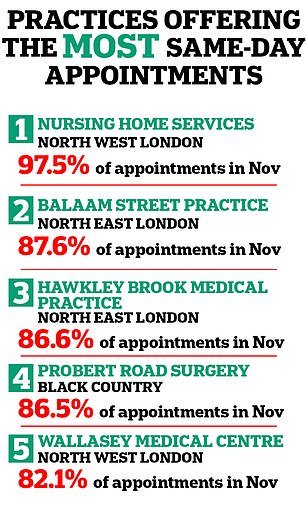

NHS data shows that access to same-day appointments varies hugely across the country.

For example, Chartwell Green Surgery in Southampton was the worst offender, with its 3.8 FTE GPs recorded as unable to provide same-day appointments in November.

Medicus Select Care in Enfield, north London, also struggled, with just 2.9 per cent of its appointments taking place on the same day in November last year.

Patients struggling to get an appointment with a GP will know they must speak to practice receptionists in an attempt to book a slot.

Our GP audit found that GPs were outnumbered by administrative staff, such as receptionists, by a ratio of 20 to one in some practices.

Medicus Select Care in London offers the fewest same-day appointments in England

Practices in England offering the most same-day appointments

Matrix Medical Practice in Chatham, Kent, had almost 24 FTE administrative staff on its books compared to its single FTE GP.

High rates were also seen at JS Medical Practice in north-central London (14 admin staff per FTE GP) and at East Lynne Medical Center in Clacton-on-Sea, Essex (12.8 admin staff per FTE GP).

Only 452 GP practices in England (7 per cent of the total) had a one-to-one ratio of administrative staff to GPs or employed more doctors than receptionists.
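The staffing comparison works the same way. The short Python sketch below shows how an admin-to-GP figure and the one-to-one test could be calculated. The function names and the sample numbers are hypothetical and are not taken from the NHS dataset.

```python
def admin_per_fte_gp(fte_admin: float, fte_gps: float) -> float:
    """FTE administrative staff for each FTE GP at a practice."""
    if fte_gps <= 0:
        raise ValueError("practice has no FTE GPs recorded")
    return fte_admin / fte_gps


def has_at_least_as_many_gps(fte_admin: float, fte_gps: float) -> bool:
    """True when a practice has a one-to-one ratio or more doctors than admin staff."""
    return fte_gps >= fte_admin


# Illustrative figures only: 12 FTE admin staff and one FTE GP.
print(admin_per_fte_gp(12.0, 1.0))          # admin staff per FTE GP
print(has_at_least_as_many_gps(12.0, 1.0))  # does the practice meet the one-to-one test?
```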

We also measured what may be one of the most critical indicators of a GP practice’s performance: patient satisfaction.

In its annual survey, the NHS asked 759,000 primary care patients about their experience with their GP between January and April last year.

A Medicus Select Care branch in the London borough of Islington received the lowest overall rating in England, with just 11 per cent of patients rating it ‘good’.

This was followed by Green Porch Medical Partnership in Sittingbourne, Kent (17 per cent), and Compass Medical Practice, a specialist primary care service treating people who have been removed from other GP patient lists in the Lancashire region (19 per cent).

In total, only nine primary care services in England achieved a perfect score of 100 per cent.

While 71 per cent of patients overall rated their GP practice as good, this was the lowest proportion since the survey began in 2017, when 85 per cent rated their GP highly.

The decline in patient satisfaction comes as GP salaries continue to rise.

Family doctors, who typically work three days a week, earn six-figure salaries on average.

The struggle to access GP appointments is a complex issue, with doctors themselves reporting feeling overwhelmed by patient demand.

Under the guidelines, GPs are told to make no more than 25 appointments a day to ensure “safe care”. But some doctors reportedly have to see almost 60 each workday.

Nationally, there is now one GP for every 2,292 patients, up almost a fifth from 2015.

The result is that millions of patients are rushed through appointments, an experience critics have likened to being treated like “goods on a factory conveyor belt”.

The struggle to access timely GP appointments also has knock-on effects on other aspects of NHS care.

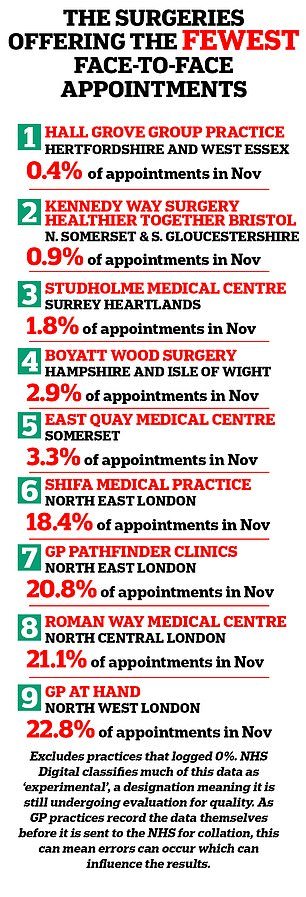

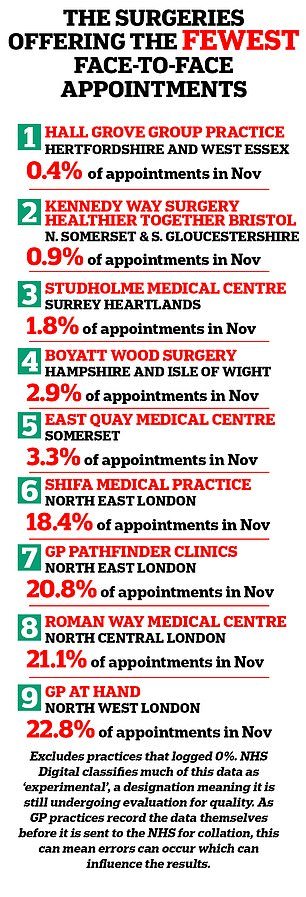

GP surgeries in England offering fewer face-to-face appointments

GP surgeries in England where 100% of appointments are in person

The GP crisis has been partly blamed for problems in A&E, as desperate patients seek help for problems they have not been able to resolve at a regular doctor’s appointment.

Despite the pressures facing primary care, ministers have quietly abandoned a promise to recruit 6,000 more GPs, a major part of Boris Johnson’s election-winning manifesto.

In November last year, there were 27,483 fully qualified FTE GPs working in England.

While this is a year-on-year increase of 0.3 per cent, the total is 400 fewer GPs than recorded in November 2021.

Analysts have said they believe England needs a further 7,400 GPs to fill gaps in primary care and ensure patients have timely access to it.

But the situation could get even worse in the near future.

Many GPs currently working in the system are retiring in their 50s, moving abroad or leaving to work in the private sector due to increased demand and NHS red tape.

This exodus risks exacerbating the workload crisis, as remaining family doctors have to accept more and more appointments, risking burnout.

NHS Digital classifies much of the data used in our analysis as “experimental”, a designation that means it is still under quality assessment.

Additionally, as GPs record much of the data themselves before sending it to the NHS for collection, errors can occur that influence the results.