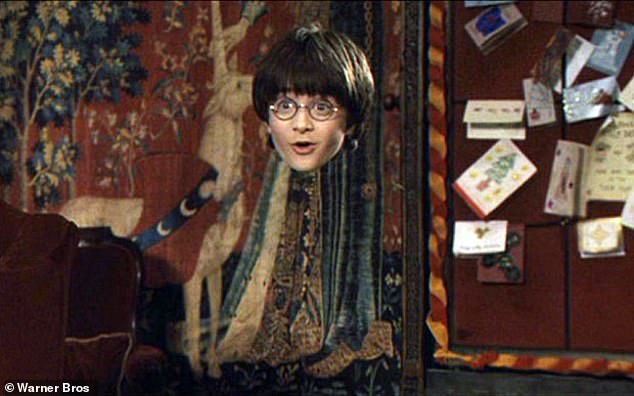

Most people who grew up reading the Harry Potter book series have dreamed of having their own invisibility cloak.

Now, that dream can become a reality, as scientists have developed a “mega invisibility shield.”

The £699 shield uses a precisely engineered set of lenses to deflect light, making objects behind it almost invisible.

And unlike Harry Potter’s cloak, the mega shield is large enough to hide multiple people standing side by side.

“Do you want to experience the power of invisibility? We’ve got you covered,” Invisibility Shield Co. said.

The mega shield is the brainchild of London-based Invisibility Shield Co, which came up with the initial idea in 2022.

Since then, the company has developed and tested several camouflage devices, before coming up with the latest design.

The Mega Shield measures 6 feet tall and 4 feet wide and is constructed of high-quality polycarbonate.

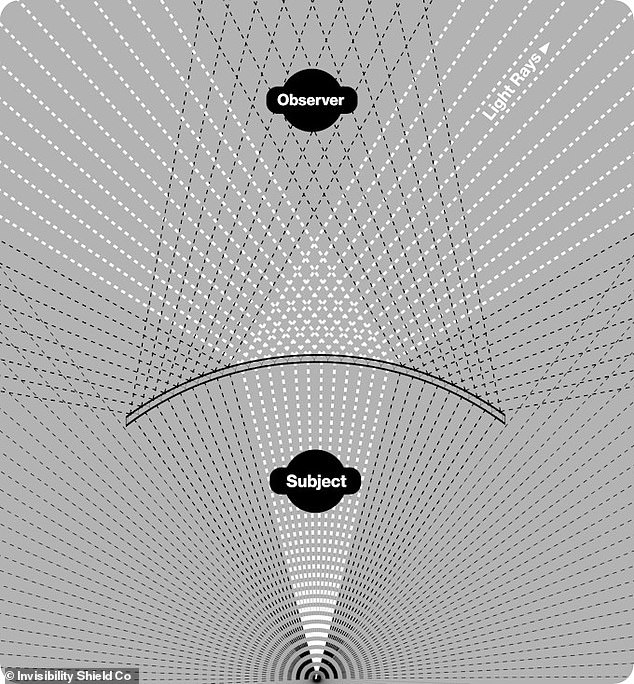

Using a precisely designed set of lenses, the shield directs light reflected from the person behind it away from the observer in front.

“The lenses in this set are oriented so that the vertical strip of light reflected from the standing or crouching subject becomes diffuse as it is spread horizontally on passing through the back of the shield,” Invisibility Shield Co explained on its Kickstarter page.

“In contrast, the strip of light reflected from the background is much wider, so when it passes through the back of the shield, much more of it is refracted through the shield and toward the observer.

“From the observer’s perspective, this background light is effectively diffused horizontally along the front face of the shield, over the area where the subject would normally be seen.”

According to the team, the shields are most effective against uniform backgrounds, such as grass, foliage, sand and sky.

“Backgrounds with defined horizontal lines also work very well and can be natural elements such as the horizon or man-made elements such as walls, railings or painted lines,” they added.

The company is now crowdfunding on Kickstarter, where you can purchase the Megashield for the early bird price of £699.

An earlier version of the shield was released in 2022, but it was only large enough for one person to crouch behind.

What’s more, users had to hold the shield themselves.

“Our new shields have been completely redesigned to be more stable when left freestanding, easy to hold, and easy to take wherever you want to go,” Invisibility Shield Co. said.

“The new ergonomic handles make holding and carrying the shield much more comfortable, while keeping the body in the optimal position to remain concealed.”
