
In your recent This is Money podcast episode ‘Will the Budget Cut Taxes?’, you talked about how to avoid scams and highlighted the importance of only downloading apps from trusted sources like Google Play.

I just want to draw your attention to the fact that there are also scams on these platforms.

I have a drone made by DJI and recently traveled to Malta. Before my trip I wanted to make sure the drone was updated and then I saw that the app I was using to control the drone needed an update.

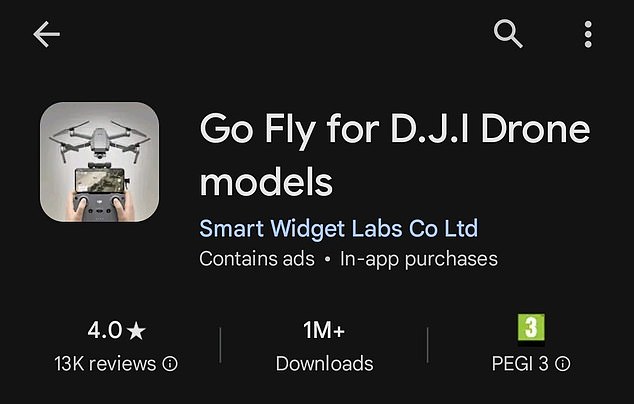

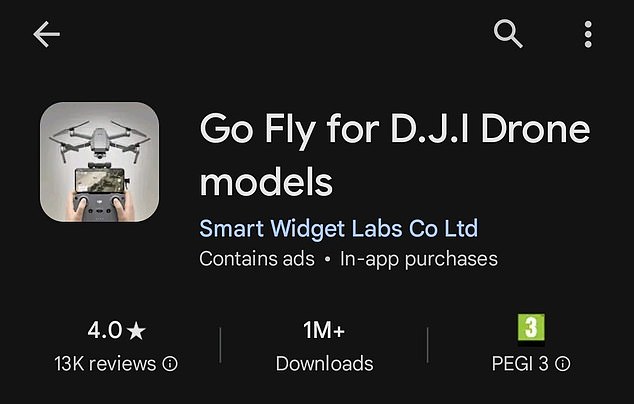

I searched Google Play and found an app called “Go Fly for DJI drone models”. It looked like a genuine DJI app.

Expensive hobby: Due to the high cost of drones, the software needed to fly them is usually free for users

After I installed it, the app asked for either a subscription payment or a one-off lifetime purchase.

It seemed so genuine that I almost paid, but I wanted to check it out with a friend who also owns the same drone.

He told me to go to the official website and download it from there, and it was completely free.

But unfortunately it looks like their scam doesn’t end here as I went back to Google Play and read all the one star reviews.

People who paid for this app allegedly saw random attempts by unknown companies to charge the debit and credit cards linked to their Google Play accounts.

Adam Batki, via email

Harvey Dorset from This Is Money responds: Given the plethora of one-star reviews warning of unknown charges on users’ cards, it seems lucky that Adam chose to check with a friend before committing to a lifetime subscription.

The large number of reviews criticizing the app in question indicates that many drone owners have not been so lucky.

One user, Jason Roan, commented: ‘This is definitely a scam app and I can’t believe I fell for it. There is no way to unsubscribe and the email they tell you to send inquiries to isn’t even a real email.

‘Save your money, don’t use this app and go to the DJI site for a link to the real app. I hope this scam app burns to the ground.’

While another, Alec Keane, warned: ‘This is a scam app. It won’t let you do anything other than buy a subscription, which I assume is just a ploy to get your banking information.

Shady: Some critics of ‘Go Fly’ app say it doesn’t let you cancel your subscription

‘The actual DJI Fly app is on their website, so download it, it’s free and no subscription required. I wish I realized that sooner.’

Batki, a personal trainer and swimming instructor from London, told This is Money: ‘Because I had extra time to think about this dodgy app on Google Play (most people think that everything on these app stores is legit, when it’s not), I didn’t pay for it and took my time to double and triple check it.

‘But let’s say I wasn’t that organized and I had flown to Malta and wanted to fly the drone. I wouldn’t be able to do it until I had updated it. Most likely I would just pay the scammers, because all I want is for my drone to be in the sky as soon as possible.’

App stores are nothing if not unwieldy beasts. Thousands of apps are added to the Google Play Store every month: in November 2023 alone, 62,000 apps were added to the platform. Likewise, 38,000 apps were added to the Apple App Store during the same month.

Unsurprisingly, with such high volumes of content, apps posing as legitimate ones can and do slip through the net. While the best advice is to stick to the official app stores, it seems that this is not a foolproof method.

In fact, being diligent when using these app stores could prevent you from inadvertently giving up both your money and your data.

When approached by This is Money, a Google spokesperson said: “Go Fly for DJI drone models has been removed from the Play Store.”

“All Android apps undergo rigorous security testing before appearing on Google Play, and Google Play Protect scans 125 billion apps daily to make sure everything stays in place.”

However, a few days later, the app was back up and running on the Google Play Store.

The app’s developer, Smart Widget Labs Co Ltd, based in Ho Chi Minh City, Vietnam, did not respond to a request for comment on Batki’s experience with the app.

This is Money also spoke to Laura Kankaala, threat intelligence lead at F-Secure, who explained how you can spot the signs of a fraudulent app.

Misleading reviews: Laura Kankaala warns that malicious apps can buy bulk reviews to boost their rating

How can you detect fraudulent or misleading apps in app stores?

The first thing, Kankaala said, is to check if the app developer is who you expected them to be.

“In legitimate app stores you can check who the developer of the app is,” she said. “For example, if you are downloading the Facebook app on your phone, the developer should be Meta Platforms, Inc. Check the developer’s details and what kind of other apps they have uploaded.”

If you are suspicious of an app, Kankaala suggests finding it through the company’s own website instead.

‘If you want to download a specific application, visit the official website of the application, service or company using your browser. Typically, they have linked the official versions of the app on their site,’ she said.

On Android devices, you will also have the option to run an antivirus or mobile security scan, if you think you may have installed a malicious app.

If you have downloaded an app, Kankaala also warned that you should review the permissions that have been given to the app on your device and make sure they are not excessive.

She added: ‘These permissions could be access to your contact lists, text messages, images or location. You can turn off any suspicious app permissions and turn them back on if the app stops working without them. There are some very dangerous permissions on Android devices, such as Device Manager or Notification Listener, that are not required unless the app needs them to function.

‘Accessibility features should be used to help people with disabilities use apps on their phones, but unfortunately these permissions are routinely misused to steal data… Accessibility features can also generate touches on behalf of users to carry out potentially risky and unwanted operations, such as installing additional applications.’
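For readers comfortable with a little scripting, the permission review Kankaala describes can be sketched in a few lines of Python. This is only an illustration, not a security tool: the function name `flag_risky_permissions`, the sample list and the choice of which permissions count as risky are all assumptions made for this example. The permission strings themselves are standard Android identifiers.

```python
# Illustrative sketch only: which permissions count as "risky" is an assumption
# for this example, based on the categories mentioned in the article.
RISKY_PERMISSIONS = {
    "android.permission.READ_CONTACTS": "contact list",
    "android.permission.READ_SMS": "text messages",
    "android.permission.ACCESS_FINE_LOCATION": "precise location",
    "android.permission.BIND_DEVICE_ADMIN": "device administrator",
    "android.permission.BIND_NOTIFICATION_LISTENER_SERVICE": "notification listener",
    "android.permission.BIND_ACCESSIBILITY_SERVICE": "accessibility service",
}


def flag_risky_permissions(permissions):
    """Return (permission, reason) pairs for entries found in RISKY_PERMISSIONS."""
    return [(p, RISKY_PERMISSIONS[p]) for p in permissions if p in RISKY_PERMISSIONS]


# Hypothetical permission list for a drone-control app.
sample = [
    "android.permission.INTERNET",
    "android.permission.READ_SMS",
    "android.permission.BIND_ACCESSIBILITY_SERVICE",
]

for permission, reason in flag_risky_permissions(sample):
    print(f"Review this permission: {permission} ({reason})")
```

A flagged permission is not proof of wrongdoing. It is simply a prompt to ask whether an app of that kind has any reason to need it.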

Best Tip: In general, it is recommended to avoid using unofficial app stores to download software.

Although Google and Apple try to remove fraudulent applications from their platforms, it is advisable to always be vigilant and not take the security of these app stores for granted.

Kankaala said: “There is constant monitoring for malicious applications, but unfortunately, it is a game of cat and mouse.”

‘Real malware, malicious applications, constantly look for ways to bypass security mechanisms and protections in place. And sometimes the apps aren’t “malicious” per se, but instead are involved in shady practices, hoping that people will, for example, enable a weekly subscription and forget about it.’

Should I check the reviews?

Leaving a bad review is an easy way to let others know that you’ve had a bad experience with an app.

However, more often than not, unreliable apps have plenty of five-star reviews to counteract the negative ones.

Kankaala said: ‘Fake reviews and stars are used to boost the app and make it appear closer to the top of search results when people search for apps. People are more likely to download the app if it appears to have a lot of reviews and stars.

“This same tactic can be used by malicious or deceptive applications: they want to boost them so that people end up downloading them, instead of legitimate applications.”

For scammers, racking up a horde of good reviews to offset the bad ones is as simple as making a quick purchase, making it harder than ever for consumers to get a true picture of the app they’re downloading.

‘It’s very easy and affordable to buy reviews and stars for an app. There are many different websites and platforms that claim to write reviews in multiple languages, and their prices range from app conversion rates (or how many installs the reviews generate) to wholesale prices,’ she said.

“My rule of thumb is to look at negative reviews and take them more seriously than positive ones, because they could expose some fraudulent or deceptive app behavior.”
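As a rough illustration of that rule of thumb, the short Python sketch below ignores star averages altogether and pulls out only the low-rated reviews that mention a warning sign. The function name `negative_review_warnings`, the keyword list, the two-star cut-off and the sample reviews are all assumptions invented for this example, not real Play Store data or an official method.

```python
# Illustrative sketch: the keyword list and the two-star cut-off are assumptions.
WARNING_WORDS = ("scam", "unsubscribe", "charge", "refund", "fake")


def negative_review_warnings(reviews, max_stars=2):
    """Return texts of low-rated reviews that mention any warning word."""
    flagged = []
    for stars, text in reviews:
        lowered = text.lower()
        if stars <= max_stars and any(word in lowered for word in WARNING_WORDS):
            flagged.append(text)
    return flagged


# Made-up reviews for illustration, not real Play Store data.
reviews = [
    (5, "Great app, works perfectly!"),
    (1, "Scam. No way to unsubscribe."),
    (5, "Best app ever"),
    (1, "Unknown charges appeared on my card."),
    (2, "Crashes on start-up."),
]

flagged = negative_review_warnings(reviews)
print(f"{len(flagged)} low-rated reviews mention a warning sign:")
for text in flagged:
    print("-", text)
```

The point of the design is that a pile of five-star ratings cannot drown out the warnings, because only the negative reviews are read at all.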

What risks can these applications pose?

The main risk is allowing an app to collect your data, which can then be sold or used for marketing purposes.

Apps that install malware on your device can be used to steal sensitive data that they cannot legally collect.

Kankaala said: “The data that the criminals behind the malware want to steal are credentials, credit card information, multi-factor authentication tokens, etc. The malware can have real financial consequences for the victim.

“Lastly, unwanted subscriptions are a popular method used by scammers and can lead to financial loss if not addressed in time.”

If you are being charged for a subscription you don’t think you signed up for, it may not be too late to get your money back.

Kankaala added: ‘Check your subscriptions and cancel any you are not actively using. If you have been scammed or, for example, your child accidentally subscribed to an app, you can try to get a refund from the app store.

‘It should be noted that if you simply delete the app, the subscription may still remain active and billing will continue. The creators of these deceptive apps hope that people forget to unsubscribe.’
