Cat Deeley is putting in the hours when it comes to her new job on This Morning.

After her first four days fronting the ITV show, the presenter spent her first day off on Friday being pampered at a London beauty salon.

The star, 47, was spotted stopping by a beauty spot to get her eyebrows waxed and tinted, ready for her close-up on Monday morning.

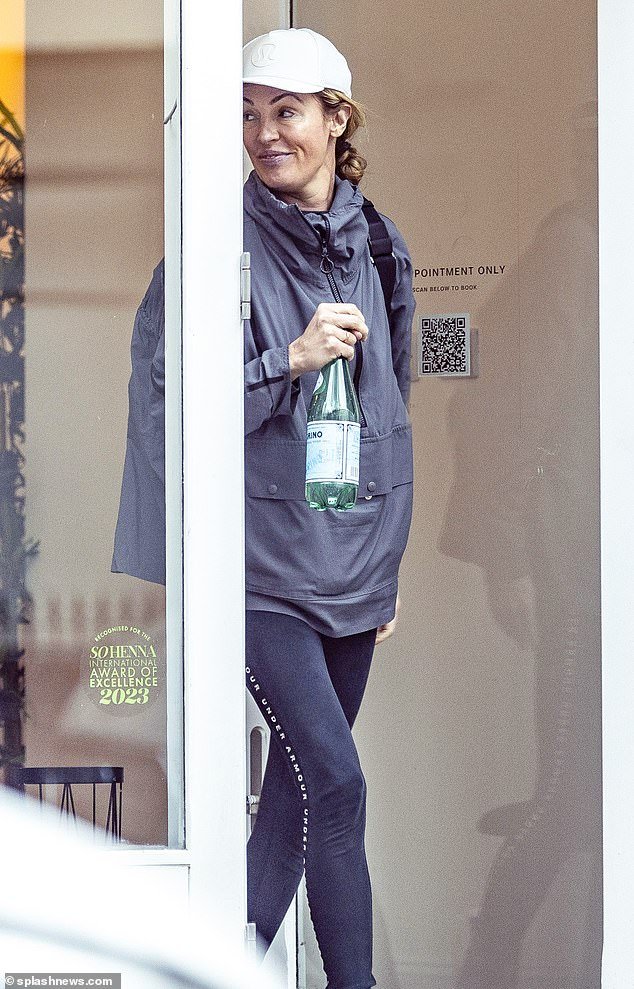

Cat was seen coming out of the salon with her newly tinted eyebrows peeking out from under her baseball cap.

Wrapping up for the rainy day, she swapped her on-screen glam for a raincoat and leggings.

Cat’s first look on This Morning has been praised by fans, with many viewers gushing over her ‘stunning’ style and natural beauty.

But the star has admitted this week that she has had her fair share of beauty mistakes over her two decades on TV.

Speaking to Ella & Jo Cosmetics, beauty ambassador Cat explained how she keeps her look so fresh – and the times when it went a little wrong.

Detailing her morning and evening routine, Cat said the first thing she does when she gets up, ‘which is quite boring’, is drink a cup of warm water.

‘I used to have hot water and lemon, but don’t do that, folks; it will ruin your teeth,’ she admitted. ‘I know, I’ve been doing it for 20 years and I had to go to the dentist and she said: “You’ve taken all the enamel off your teeth, don’t do it, have warm water”.’

And while she looks flawless on set every day, she admitted she’s had many beauty disasters in her time.

‘I’ve had a terrible perm,’ she said. ‘I had one eyebrow, then I had two very thin eyebrows.’

The former Stars In Their Eyes presenter went on to say that she once cut her hair really short and it ended up looking like a ‘giant mushroom’.

‘I had red hair, dark hair, blonde hair,’ she added. ‘There’s nothing I haven’t done.’

But she’s thankful for her beauty blunders, saying that making mistakes is the key to finally feeling comfortable in your own skin.

She said: ‘I think you have to go through it to find out what doesn’t work for you and find out what does work for you. And sometimes I feel incredibly boring, but this works for me.’

While viewers were full of praise for Cat and her co-star Ben Shephard this week, others picked up on an annoying habit of Cat’s.

Some fans pointed out the seasoned presenter’s habit of interrupting guests – celebrity chef John Torode among them.

Fans of the show were particularly annoyed that Cat and Ben regularly talked over each other as they navigated their way through the various segments of the show.

Singling out her performance as Torode, a show regular, talked the hosts through his ultimate Thai green curry, one unimpressed viewer wrote: ‘Is this the Cat Deeley show? She never stops making noise!’

Another wrote: ‘The show is called This Morning. NOT the Ben Shepherd Show or the Cat Deeley Show. STOP talking over each other and stop interrupting the guests. OMG – what a noise!’

A third wrote: ‘Cat Deeley needs to listen more instead of talking all the time. John Torode tried to explain a recipe but she kept interrupting, I prefer Ben Shepherd.

‘I might have to turn off This Morning, now she’s there all the time. She’s too over the top.’

Another joked: ‘Will someone take Cat Deeley outside and give her a tranquilizer shot or an anesthetic.’