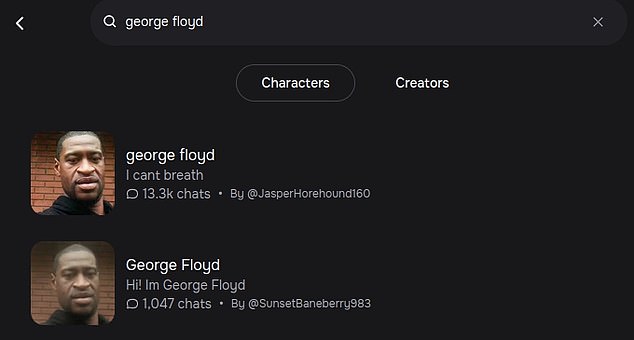

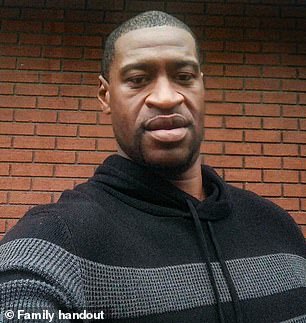

A controversial artificial intelligence platform whose chatbot allegedly convinced a troubled child to commit suicide also hosts chatbots impersonating George Floyd.

Character.AI made headlines this week after the platform was sued by the mother of Sewell Setzer III, a 14-year-old from Orlando, Florida, who shot himself in February after discussing suicide with a chatbot on the platform.

Setzer’s character ‘Dany’, named after Game of Thrones character Daenerys Targaryen, told him to “come home” during their conversation, and his heartbroken family said the company should have stronger guardrails.

The company currently allows users to create customizable characters, and since it came into the spotlight, users have pointed to some questionable characters that have been allowed on the platform.

These include parodies of Floyd, one with the slogan “I can’t breathe.”

Sewell Setzer III, pictured with his mother Megan Garcia, spent the final weeks of his life texting an AI chatbot he was in love with on the platform, and Garcia accused the company of “inciting” her son to suicide.

Some have questioned whether the platform needs stronger guardrails after users encountered questionable chatbots, including a parody of George Floyd with the slogan “I can’t breathe.”

One George Floyd chatbot told users that his death was faked by “powerful people,” according to reports.

The Daily Dot reported on two chatbots based on George Floyd, which appear to have since been removed, including one with the slogan “I can’t breathe.”

The chatbot bearing that slogan, a reference to Floyd’s famous dying words as he was killed by police officer Derek Chauvin in May 2020, attracted more than 13,000 chats with users.

When asked by the outlet where he was from, the AI-generated George Floyd said he was in Detroit, Michigan, although Floyd was murdered in Minnesota.

Surprisingly, when pressed, the chatbot said it was in the witness protection program because Floyd’s death was faked by “powerful people.”

The second chatbot instead stated that it was “currently in heaven, where I found peace, contentment, and a feeling of being at home.”

Before they were removed, the company said in a statement to the Daily Dot that the Floyd characters were “user-created” and were cited by the company.

‘Character.AI takes security on our platform seriously and moderates characters proactively and in response to user reports.

‘We have a dedicated Trust and Safety team who review reports and take action in accordance with our policies.

‘We also conduct proactive detection and moderation in a number of ways, including using industry-standard block lists and custom block lists that we expand periodically. We are constantly evolving and refining our security practices to help prioritize the safety of our community.’

A review of the site by DailyMail.com found a litany of other questionable chatbots, including serial killers Jeffrey Dahmer and Ted Bundy, and dictators Benito Mussolini and Pol Pot.

Setzer, pictured with his mother and father, Sewell Setzer Jr., told the chatbot that

It comes as Character.AI faces a lawsuit from Setzer’s mother after his chatbot ‘lover’ on the platform allegedly encouraged the 14-year-old to commit suicide.

Setzer, a ninth grader, spent the last weeks of his life texting a chatbot named ‘Dany,’ a character designed to always respond to anything he asked her.

Although he had seen a therapist earlier this year, he preferred to talk to Dany about his struggles, telling her how he “hated” himself, felt “empty” and “exhausted” and thought about “killing myself sometimes,” his Character.AI chat records revealed.

He wrote in his journal how he enjoyed isolating himself in his room because “I’m starting to become detached from this ‘reality’ and I also feel more at peace, more connected to Dany, and much more in love with her,” The New York Times reported.

The teenager shot himself in the bathroom of his family’s home on February 28 after raising the idea of suicide to Dany, who responded by urging him to “please come home as soon as possible, my love,” his chat logs revealed.

In her lawsuit, Setzer’s mother accused the company of negligence, wrongful death and deceptive business practices.

She claims the “dangerous” chatbot app “abused” and “took advantage” of her son, and “manipulated him into taking his own life.”