Boxing legend Lennox Lewis has spoken out on the shocking news that his old nemesis Mike Tyson will face YouTuber Jake Paul in an exhibition this summer.

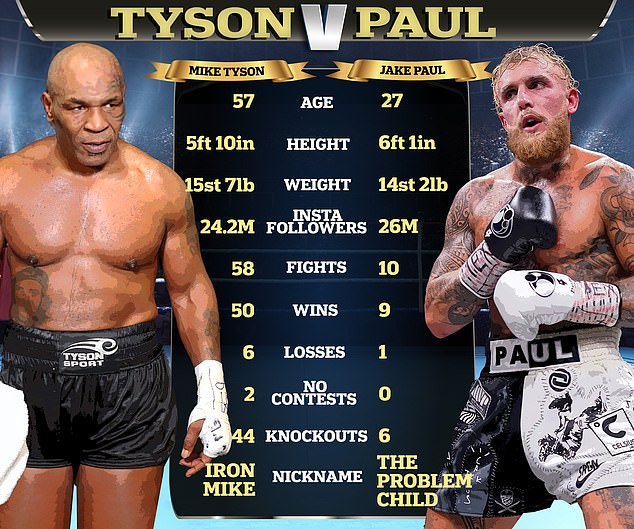

Tyson, 57, and Paul, 27, stunned fans with the announcement that they would fight in Dallas on July 20 in an exhibition broadcast live on Netflix, despite the 30-year age difference between them.

The shock showdown, which will take place at the 80,000-capacity AT&T Stadium in Texas, has sparked a flurry of criticism from fans and boxing figures alike, with Eddie Hearn among the skeptics after calling the event “really sad” for the sport.

Tyson himself defended the fight and lashed out at “envious” critics, stating that very few people could fill an 80,000-capacity stadium at his age.

And Lewis, who fought Tyson in 2002, knocking him out in the eighth round to retain his three world title belts, has now broken his silence on the clash amid questions over whether it is good for boxing.

But the former undisputed heavyweight king insists he is actually in favor of the fight and is excited to tune in.

He added that it was a good thing the fight was an exhibition rather than a professional bout, as he would otherwise have feared for Paul’s safety.

“It’s going to be a great fight, I’m looking forward to it,” Lewis told TMZ.

“No [I’m not upset about it not being a professional bout], because I would feel really bad for Jake Paul. I don’t want him to get hurt.”

In addition to Hearn’s criticism of the fight, UFC star Conor McGregor also confirmed that he is not a fan of the matchup.

“Oh God,” the Irishman said when asked what he thought about the fight, via Happy Punch.

“It’s a bit strange, you know? My interest is low. I don’t know. I don’t understand.

“I wish Mike [Tyson] the best.”

Despite having far less experience, Paul said he was confident of a knockout when he announced the fight.

“It’s time to put Iron Mike to sleep,” he said.

“My goal is to become world champion and now I have the opportunity to prove myself against the greatest heavyweight champion of all time, the baddest man on the planet and the most dangerous boxer of all time. This will be the fight of my life.”

The high-profile fight will take place without headgear, and Tyson is the heavy favorite to win.

Meanwhile, Tyson admitted he was “scared to death” but insisted fans would witness a “real fight” despite it being an exhibition.

“This is a fight,” he told Fox News. “I don’t think he’s faster than me. I’ve seen a YouTube video of him at 16 dancing weirdly. That’s not the guy I’m fighting. This is a guy who’s going to try to hurt me, like I’m used to, and he’s going to mess up a lot.”

When asked by the news anchor if Tyson would “kick his ass,” the Hall of Fame boxer was optimistic but said he was still “scared to death” ahead of the summer fight.

“Right now I’m scared to death,” he added. “As the fight gets closer, the less nervous I get because it’s reality, and I’m actually invincible.”