Ariana Grande has asked her fans to stop sending hate to her loved ones following the release of her new album, Eternal Sunshine.

On Saturday, the 30-year-old pop star took to her Instagram Stories to address the nasty messages people have been sending to “the people in (her) life” amid fan speculation about cryptic lyrics on her new album.

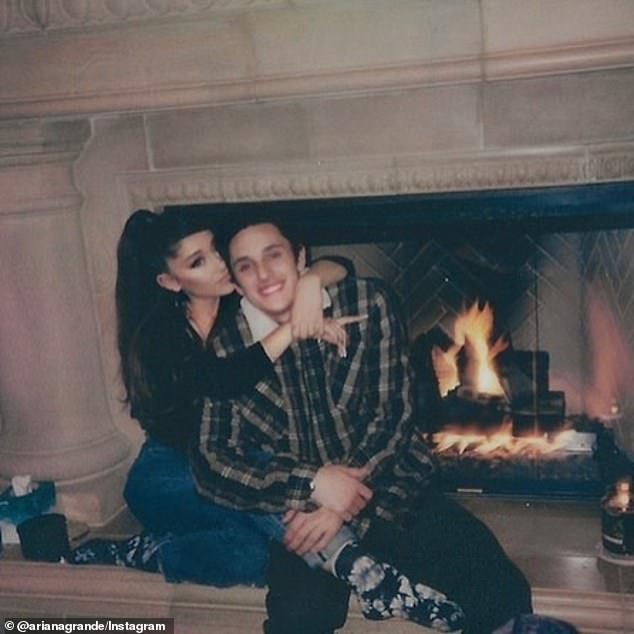

The singer also appeared to be addressing the drama surrounding the album's title track, whose lyrics sparked accusations from fans that her ex-husband Dalton Gomez may have been unfaithful.

In response to the rumors, she sharply criticized those who send “hate messages” simply “based on their interpretation of this album.”

The “Yes, and?” hitmaker added that such people are “also completely misunderstanding the intention behind the music.”

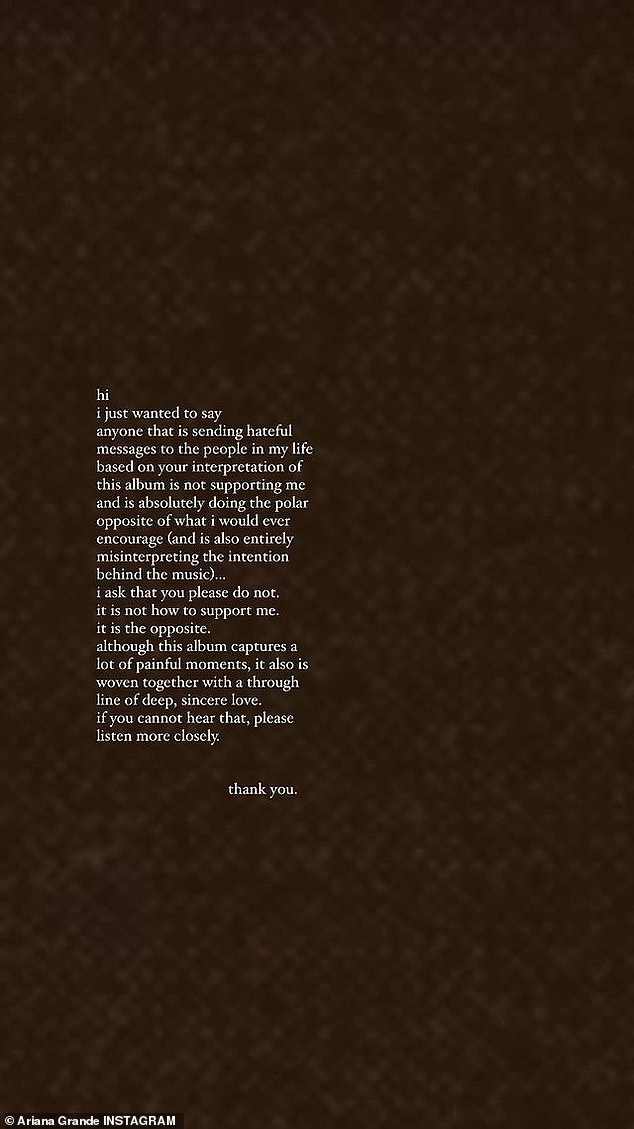

“I just wanted to say that anyone who is sending hateful messages to the people in my life based on their interpretation of this album is not supporting me and is doing the absolute opposite of what I would encourage,” she began.

“I ask you to please not do it. It’s not how to support me,” she continued. “It’s quite the opposite.”

“Although this album captures many painful moments, it is also interwoven with a line of deep and sincere love,” she wrote.

“If you can’t hear that, listen more closely,” she concluded. “Thank you.”

The two-time Grammy winner followed up with a similar post featuring a blank background and white text that read, “Thank you! I love you!!!!!!!”

Ahead of the release of her seventh studio album, the singer faced backlash from trolls over her new relationship with her Wicked co-star Ethan Slater.

News had just broken of her separation from Gomez in early 2023, and around the same time, Slater filed for divorce from his then-wife Lilly Jay, with whom he had just welcomed a baby.

In a recent 2023 recap post, she said she felt “deeply misunderstood” over the past year, seemingly alluding to allegations surrounding her divorce and new relationship.

Grande and Slater’s romance was the subject of criticism as some people accused them of infidelity.

She later addressed the controversy directly in the first single from her new album, “Yes, and?”

Despite the drama, including her split from Gomez and the start of her relationship with her current boyfriend Slater, she said that last year was “one of the most transformative, most challenging, and yet happiest and special years of my life.”

In her lengthy reflection on 2023, she said that “there were so many beautiful and yet polarized feelings.”