- In 2024, 28 kg of plastic waste will be generated per person worldwide

- More than a third will be mismanaged: 68.6 million tonnes of plastic


This year alone, some 220 million tonnes of plastic waste will be generated, the equivalent of 20,000 Eiffel Towers, warns a new report.

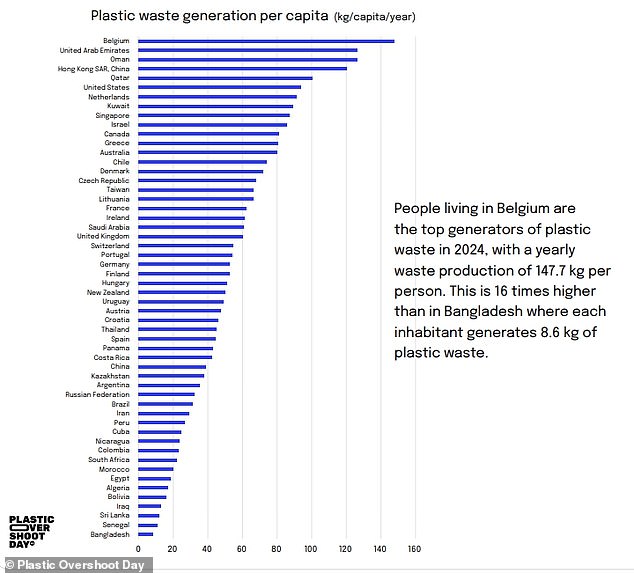

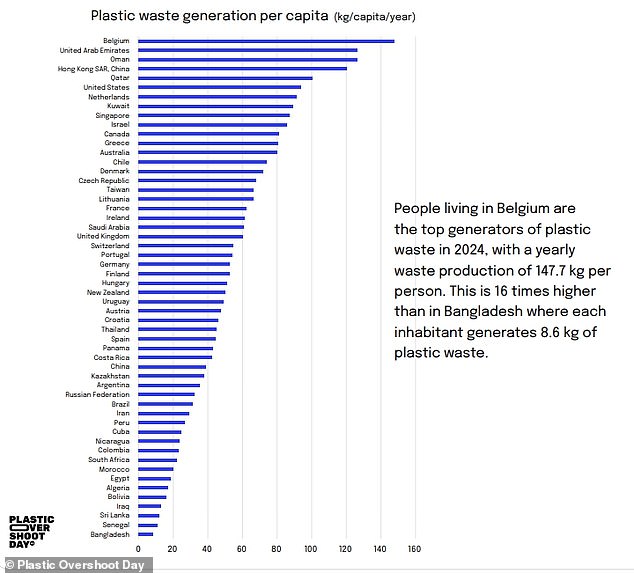

Charity EA Earth Action has published its annual study showing that 28 kg of plastic waste per person will be generated globally in 2024.

It predicts that more than a third of this waste will be mismanaged at the end of its life.

Worryingly, this equates to some 68.6 million tonnes of plastic ending up in landfills.

This figure covers plastic packaging, plastic in textiles and plastic in household waste.


People living in Belgium are the largest generators of plastic waste per person.

The report also estimates the date of Plastic Overshoot Day, the point in the year when the amount of plastic waste generated exceeds the world's capacity to manage it.

This year, it is expected to fall on September 5.

Researchers also warned that, as of this month, nearly 50 percent of the world's population lives in areas where the amount of plastic waste generated has already exceeded the capacity to manage it.

This figure will rise to 66 percent by Plastic Overshoot Day, they said.

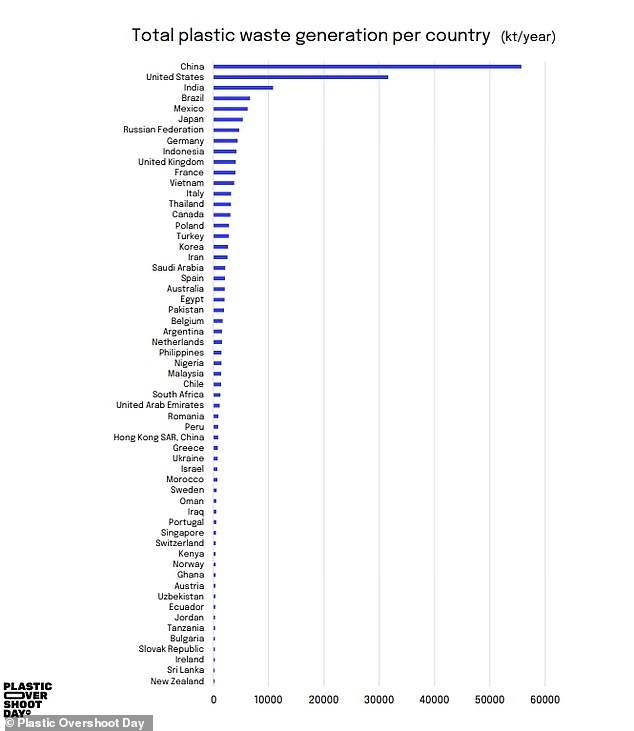

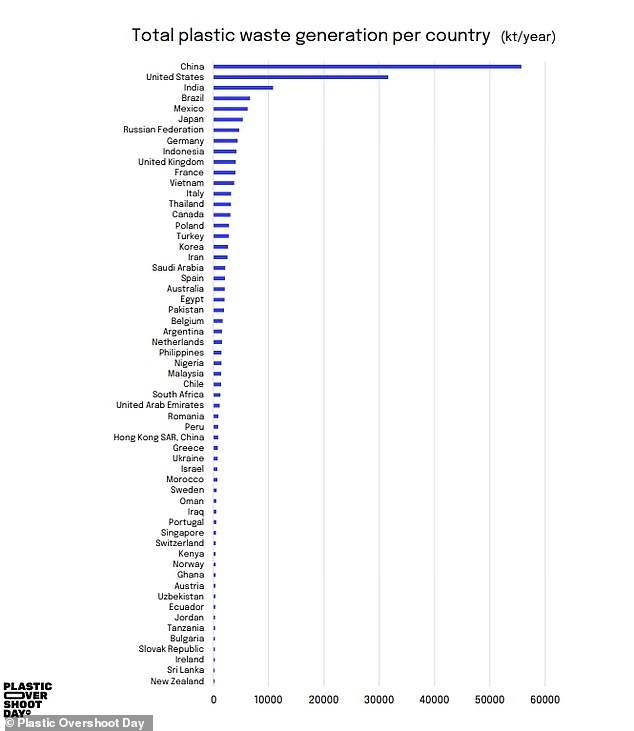

Just 12 countries are responsible for 60 percent of the world’s mismanaged plastic waste, they added, with the top five being China, the United States, India, Brazil and Mexico.


China is the largest overall generator of plastic waste.

Sarah Perreard, co-chief executive of EA Earth Action, said: "The findings are unequivocal: improvements in waste management capacity are being outpaced by rising plastic production, making progress almost invisible.

“The assumption that recycling and waste management capacity will solve the plastic crisis is wrong.”

Sian Sutherland, co-founder of A Plastic Planet, said: "After decades of scientists sounding the alarm, it is now clear for all to see that plastic pollution has put humanity on the path to an ecological and humanitarian disaster.

“This year we have a narrow window of opportunity to create a global Plastics Treaty that protects not only our ocean, our air, our soil, but also our own children.”

The report was released ahead of the fourth round of negotiations for a UN Global Plastics Treaty taking place in Ottawa, Canada, later this month.

It recommends strategies such as reducing plastic consumption and use, promoting repair and reuse initiatives, improving local waste management infrastructure, and ending imports of plastic waste from other countries.