Tesla has been forced to cut prices in the US and around the world just days after it said it would lay off 10% of its global workforce, as it battles falling sales and increased competition in the electric vehicle market.

Car prices in the United States, Germany and China were reduced over the weekend, and the price of Tesla’s self-driving software was also cut in the United States.

The cuts come after Tesla, led by CEO Elon Musk, reported its first drop in global vehicle deliveries in four years.

In the United States, the prices of the Model Y, Model S and Model X were cut by $2,000 on Friday. Just one day later, Tesla cut the price of its self-driving software in the United States from $12,000 to $8,000.

In China, one of the world’s largest car markets, the price of the Model 3 was cut by 14,000 yuan ($1,930) to 231,900 yuan ($32,000), according to Tesla’s official website on Sunday.



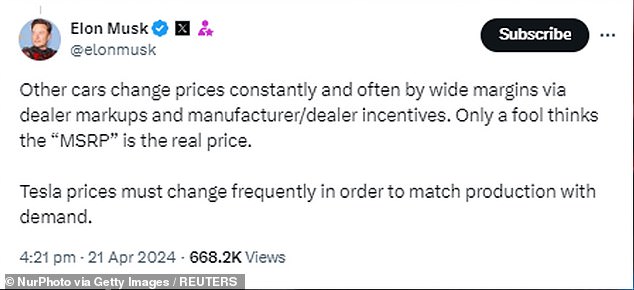

Elon Musk hinted that more price changes will soon be on the horizon

In Germany, the price of the rear-wheel drive Model 3 was reduced from 42,990 euros to 40,990 euros ($43,670.75). Many other countries in Europe, the Middle East and Africa also saw Tesla price cuts.

Elon Musk hinted that more price changes could soon be on the horizon, posting on X: “Tesla prices must change frequently to match production with demand.”

The latest round of price cuts comes just a week after Musk revealed that Tesla would lay off 10% of its global workforce, affecting about 14,000 employees.

Musk’s memo said that as Tesla prepares for its next phase of growth, “it is extremely important to examine all aspects of the company to reduce costs and increase productivity.”

Also on Monday, two key Tesla executives announced on the social media platform X that they were leaving the company.

Andrew Baglino, senior vice president of powertrain and energy engineering, wrote that he had decided to leave after 18 years with the company.

Rohan Patel, senior director of global public policy and business development, also wrote on X that he would be leaving Tesla after eight years.

Baglino, who held several top engineering positions at the company and was chief technology officer, wrote that the decision to leave was difficult. “I loved tackling almost every problem we solved as a team and am pleased to have contributed to the mission of accelerating the transition to sustainable energy,” he wrote.


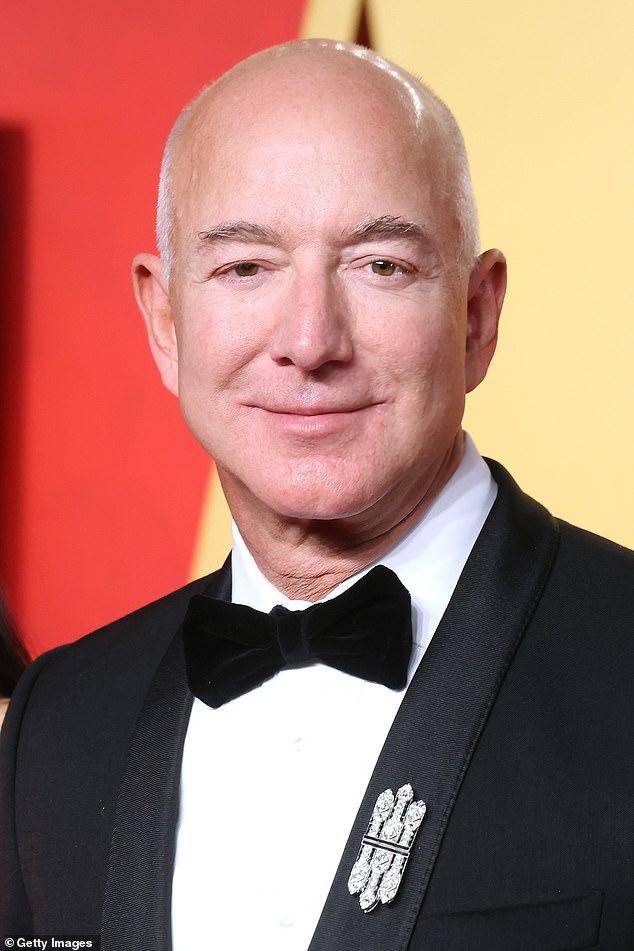

Jeff Bezos (pictured) has invested $700 million in Rivian


Baglino has no concrete plans beyond spending more time with his family and young children, though he wrote that he finds it hard to sit still for long.

Musk thanked Baglino in response. “Few have contributed as much as you,” he wrote.

Since the beginning of the year, Tesla’s share price has fallen almost 41%.

But Tesla is not the only electric vehicle manufacturer facing financial problems.

Rivian, backed by Jeff Bezos, is planning a second round of layoffs this year, amid a major drop in demand for electric vehicles.

In February, it cut 10% of its 16,790 employees in North America and Europe, and it now plans to lay off another 1%.

This comes despite the company’s sales doubling in 2023. Shares of publicly traded Rivian have fallen almost 60% since the beginning of the year.

Amazon, founded by Bezos, led a $700 million investment in the automaker and owns a 17% stake, making it the company’s largest shareholder.

Electric vehicle manufacturers are facing lower-than-expected demand and are having to reduce production to match it.

In October, General Motors abandoned its plan to have 400,000 electric vehicles ready for sale by mid-2024, even though its electric vehicle sales had increased quarter-on-quarter over the past year. Ford also announced a slowdown in production.

It’s not just American electric vehicle companies that are facing the crisis. China’s BYD reported dramatic drops in sales last year, with a 42% drop in the final quarter of 2023 compared to 2022.