Roman Reigns’ 1,316-day championship reign finally came to an end thanks to Cody Rhodes, who in turn finally finished his story.

There were three more new world champions crowned that night, including two in the first two matches.

We were also treated to a series of legendary returns in the main event that helped create some of the greatest babyface highlights in WWE history.

Logan Paul also showed up on a night when he faced two future Hall of Famers and did some great marketing for PRIME.

So, let’s get to the results:

WWE World Heavyweight Title: Drew McIntyre def. Seth Rollins (c)

Drew McIntyre achieved his victory in front of the fans, but he couldn’t enjoy it for long.

With the pace these two started at, it was obvious we were in for a world-class sprint, and they did not disappoint.

Both men unloaded their arsenals, starting with a Claymore in the opening seconds of the match.

McIntyre would need four Claymores to get the job done, but he eventually won the title in front of the fans.

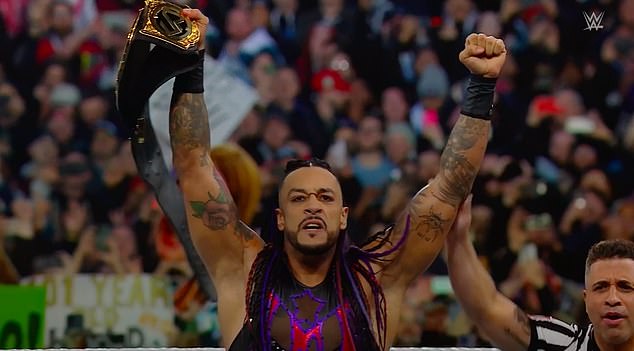

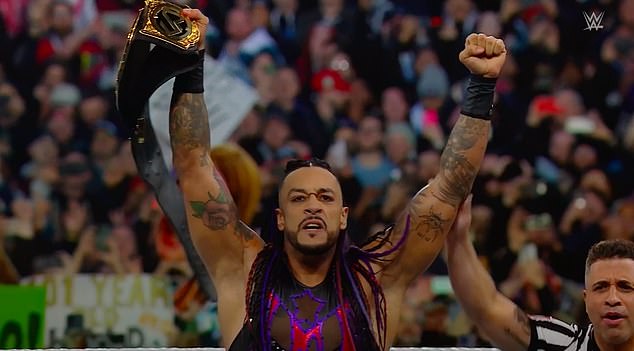

WWE World Heavyweight Title: Damian Priest def. Drew McIntyre (c)

Damian Priest had been awfully quiet with his briefcase, but he made it count.

Remember how we said McIntyre won the title in front of the fans? Well, minutes later, he discovered what it’s like to lose in front of them too.

The Scotsman was gloating too much when CM Punk, who was on commentary, finally snapped. He attacked McIntyre with the brace on his arm, and then Damian Priest’s music played.

The Judgment Day man ran to the ring, cashed in his Money in the Bank briefcase, hit a chokeslam and secured his first world title reign.

Philadelphia Street Fight: The Pride def. The Final Testament

The Pride scored an entertaining victory with ECW legend Bubba Ray Dudley as the referee.

This was an entertaining affair from start to finish. Bubba Ray Dudley was announced as the surprise special guest referee and rap legend Snoop Dogg appeared as the special guest commentator.

Kendo sticks and tables dominated the action. After a rough start, The Pride (Bobby Lashley and The Street Profits) took the advantage and isolated Karrion Kross.

The first table Kross was placed on broke before Montez Ford could climb the top rope. But the second time, Ford hit the Frog Splash and secured the victory for his team.

LA Knight def. AJ Styles

LA Knight scored a big win in his first WrestleMania, with AJ Styles doing an excellent job.

Except for a period of control in the middle of the match, AJ Styles showcased LA Knight in his first WrestleMania contest, and he did a very good job of it.

The crowd was raving about Knight, and Styles, a man who keeps mentioning retirement, used his legacy to give one of WWE’s most popular stars a big rub.

It was another decent sprint of a match. Knight won with Blunt Force Trauma, but Styles tried to give fans some of his biggest hits, including a 450 splash.

United States Championship: Logan Paul (c) def. Randy Orton and Kevin Owens

Logan Paul got a big win over two pro wrestling legends, but PRIME won more.

This was a triple threat that mixed plenty of classic spots with the freshness of Logan Paul, which was a good recipe for the night.

Paul made his entrance in a huge PRIME truck and arrived at the ring with a mascot. The question was who was in the suit. It turned out to be IShowSpeed, and he got an RKO on the announcers’ table for his trouble.

Down the stretch, Orton and Owens hit the RKO and Stunner before Orton turned a pop-up powerbomb into an RKO. Paul took advantage of this to knock Orton out of the ring, perform a frog splash and secure the victory.

SmackDown Women’s Championship: Bayley def. IYO SKY (c)

Bayley had a long-awaited WrestleMania moment after 11 years in WWE.

Bayley finally got the WrestleMania moment she deserved when she dethroned her traitorous best friend, IYO SKY.

After a few years of losing at the showpiece event and another where she was simply the host, Bayley got the long-term story treatment with Damage CTRL and took that story to its end.

The match itself was better than the build it was given. Bayley had to battle a bad knee to overcome SKY, one of the most underrated champions on the roster. SKY performed an impressive counter to the Rose Plant before that same move proved to be her demise.

Undisputed WWE Universal Championship: Cody Rhodes def. Roman Reigns (c)

The locker room emptied to celebrate Cody Rhodes’ first world title.

Two huge milestones emerged from this stellar main event. One, Cody Rhodes finally finished his story and, in many ways, just started a new one. Two, Roman Reigns’ epic 1,316-day reign has finally come to an end and, in time, it will be celebrated for how truly great it was.

The action started slow, as expected, before Reigns took control for the majority of the match. The two men traded big moves and last-second kickouts on several occasions before the antics really got underway.

Jimmy Uso interfered. Jey Uso came out to deal with him. Solo Sikoa interfered. John Cena came out to deal with him. The Rock interfered. Seth Rollins attempted to interfere against his former Shield teammate. The Undertaker came out to confront him. And yes, it was as awesome as it sounds with those names involved.

After all the chaos, Cody managed to hit three consecutive Cross Rhodes to close the book on Roman’s reign and finally finish his story.