More than 600 hospitals serving millions of rural Americans are at risk of closing, according to a new report that prompted experts to “sound the alarm.”

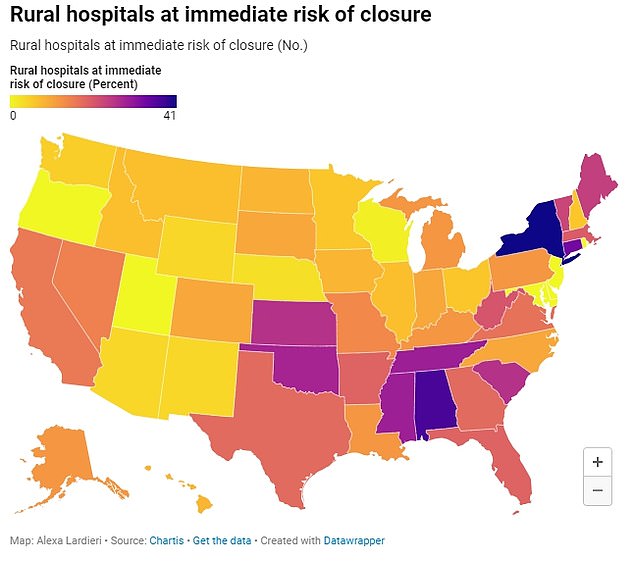

More than half of those hospitals are at risk of being closed immediately.

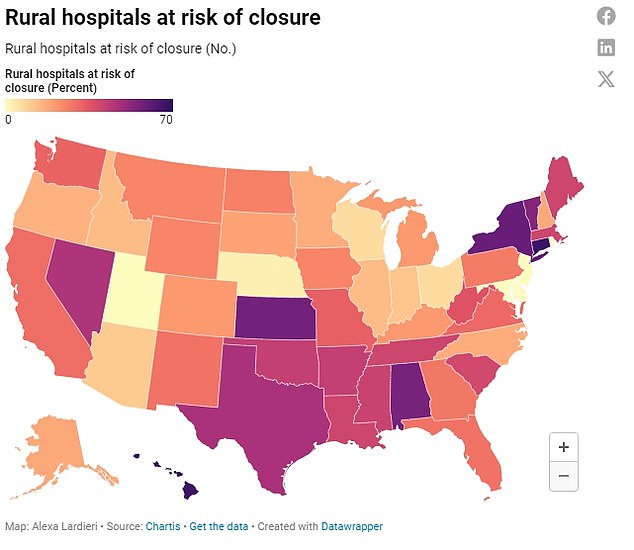

There are rural hospitals at risk of closing in almost every state, but higher numbers are seen in the Southeast and Northeast.

Facilities are at risk because they face much greater financial challenges in treating patients and providing care due to staffing shortages and lower payments from health insurers.

An analysis by Chartis, a Chicago health care advisory firm, found that the state most at risk for closures was Texas, where 75 rural hospitals (47 percent) could close and 28 (18 percent) are at risk of immediate closure.

There are approximately 2,200 rural hospitals across the United States, on which an estimated 60 million Americans depend for basic health care.

In more than half of the states, 25 percent or more of rural hospitals are at risk, and in 15 states, nearly half of rural hospitals are at risk.

In five states (Utah, Rhode Island, New Jersey, Maryland and Delaware) no rural hospitals are at risk of closure.

After Texas, Kansas is at risk of losing 58 rural hospitals (57 percent), with 27 (26 percent) at risk of being forced to close immediately.

Kansas currently has 102 rural hospitals, and 911,000 of its residents are considered to live in rural areas.

Oklahoma is at risk of losing 33 rural hospitals (42 percent) with nearly two dozen (28 percent) at immediate risk.

Over the past 20 years, national spending on hospital services has tripled and is expected to continue rising even faster in the coming years due to inflation, supply chain issues and staff shortages.

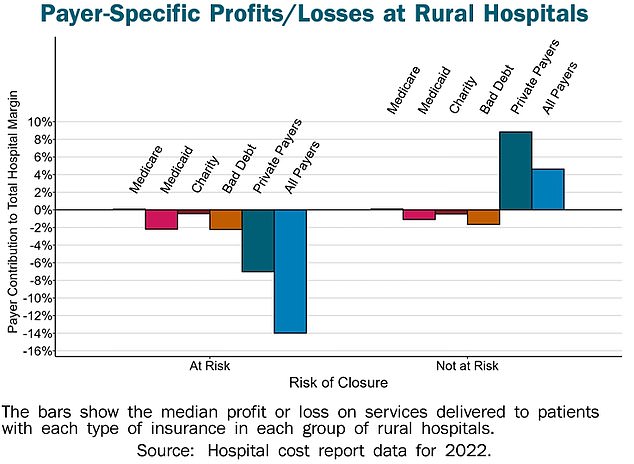

The report found that the main reason hospitals are at risk of closing is that private insurance plans pay them less than it costs to treat patients, leading rural institutions to lose money on the services they provide to patients.

They also lose money on Medicaid patients and patients who don’t have health insurance.

Rural hospitals also have lower financial reserves than urban hospitals and cannot offset the losses they face. The percentage of rural U.S. hospitals operating at a loss is now 50 percent, up from 43 percent last year.

Additionally, the financial assistance that hospitals began receiving during the Covid pandemic has ended. Because of that assistance, there were not as many rural hospital closures in 2021 and 2022 as there were in 2019.

While 18 hospitals closed during the pandemic in 2020, researchers attributed this to financial problems that facilities were already experiencing before Covid.

The biggest source of losses for rural hospitals is patients with private insurance.

The Chartis analysis did not specify which hospitals are at risk, but data from Saving Rural Hospitals, administered by the Center for Healthcare Quality and Payment Reform, shows that hundreds of hospitals are operating at a financial loss.

In Texas, approximately 110 hospitals are losing money on health care services provided to patients, including Yoakum County Hospital, which operates with the largest deficit in the state (and the country), at -72 percent.

The second-largest deficit in the state (and country) belongs to Cochran Memorial Hospital, with a -61 percent patient margin over the past three years.

Rounding out the top five nationwide are Welch Community Hospital in West Virginia; Thomasville Regional Medical Center in Alabama; and Reagan Memorial Hospital in Texas.

While some rural hospitals may not be at risk of closing completely, many have stopped providing some basic services.

Twenty-five percent (267) of rural facilities dropped OB/GYN services between 2011 and 2021.

And 387 stopped administering chemotherapy between 2014 and 2021.

The authors of the Chartis report said: “Significant changes must be made to both the amounts and payment methods for rural hospital services to prevent more rural hospitals from closing in the future.”

While the payments are sufficient to cover the cost of services at larger, urban facilities, those payments will not cover the costs of rural hospitals because there are far fewer patients to treat – and bill for – compared to the fixed costs of operating a hospital.

When rural hospitals close due to lack of funding, more than just access to health care is jeopardized.

Chartis researchers said: “The closure of rural hospitals threatens the country’s food supply and energy production because farms, ranches, mines, drilling sites, wind farms and solar energy facilities are located primarily in rural areas, and they will not be able to attract and retain workers if workers cannot obtain adequate health services.”

In rural areas, people face difficulties traveling or accessing healthcare due to a shortage of hospitals compared to urban areas.

Nearly two-thirds of rural hospitals are more than 20 miles from the nearest other hospital, and a quarter are 30 miles or more away.

For urban hospitals, most are located within five miles of each other, and more than 80 percent are within 15 miles of another hospital.

The census defines “rural” as an area that has fewer than 50,000 people and classifies 19 percent of Americans (60 million people) as living in rural areas.

Small rural hospitals have been defined as those with annual expenditures less than $40 million.

Rural hospitals provide many of the same services as urban facilities, including emergency services, basic laboratory testing and imaging, as well as outpatient and inpatient services.

However, they do so at a much lower volume.

Most rural hospitals have fewer than 25 beds, compared to more than 200 beds in urban hospitals.

Half of urban hospitals have expenses of more than $250 million, but only two percent of rural hospitals operate on that amount of money.

Due to a lack of access to health care, hospitals in rural communities are often sources of primary care for residents in addition to their emergency services. The closure of a rural hospital means people will have to travel further for medical services or miss out on important basic or preventive care.