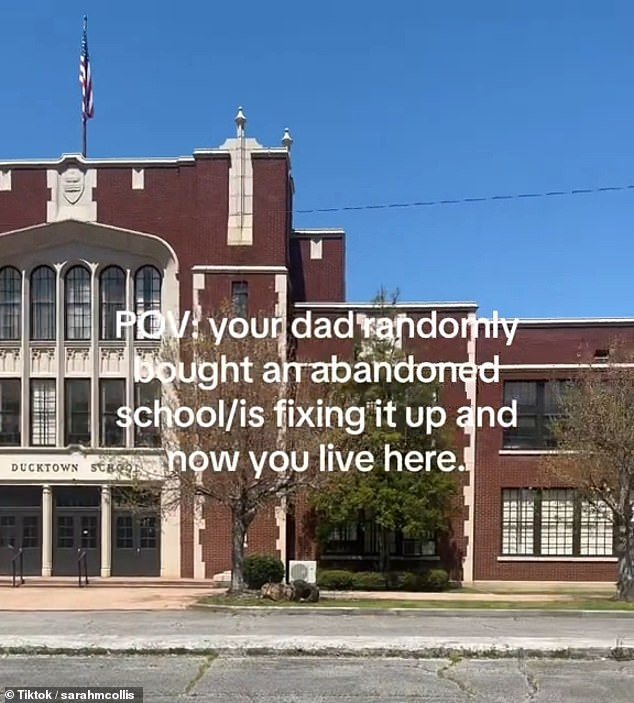

A family in Tennessee bought an old abandoned schoolhouse and is turning it into the mansion of their dreams, but the renovation project scares skeptics.

High school senior Sarah Collis uploaded a video to TikTok with the caption: “POV: Your dad randomly bought an abandoned school/he’s fixing it up and now you live here.”

The video shows the transformation of Ducktown School from a creepy, dated and run-down school to a slightly more homely and livable building.

Ducktown School was formerly called Kimsey Junior College and was built in 1933 with the original purpose of promoting education for the town’s miners.

In 2021, Jason Collis and his wife purchased the historic building and are still working to restore it, while living in the huge abandoned school.

The school was abandoned in 2006 and suffered vandalism and neglect for years, Collis told ABC.

Collis attended Kimsey Junior College from kindergarten to seventh grade, and he, along with other members of the community, was not prepared to see the school disappear.

He said he wasn’t prepared for the work the transformation would entail, but his family is determined to turn the old building into the unique home of their dreams.

In Sarah’s most recent video, posted Wednesday, many of the school’s rooms remain intact, including the gym, auditorium, hallways and lockers, stairs and some of the classrooms.

The paint is badly peeling and the floors and walls are worn and damaged, while much of the old school furniture remains in the rooms.

Sarah insisted that the family didn’t just move in and that most of these rooms have already been fixed up.

She also shared that they hold meetings at the school and use it for the community all the time.

However, a recent clip of Sarah’s room shows some of the progress her family has made in their renovation efforts.

Sarah’s spacious room is beautifully decorated and in good condition, and has apparently been updated with new flooring and paint jobs.

Although some elements, such as the windows and baseboard, remain intact, the room overall looks much nicer than other parts of the house.

The school-turned-house features a sprawling lawn, originally a playground, surrounding the property.

The playground still has swings, roundabouts and rocking chairs, which lend a nostalgic and slightly eerie tone to what is now the backyard.

TikTokers flocked to the comments to talk about the unique house, with many agreeing that the old abandoned school is creepy.

“Why does it scare me?” one user commented, while others asked for updates on renovations and room tours.

Some rooms in the school-turned-house are much creepier than others, especially the boiler room, which Sarah posted a tour of on TikTok.

The boiler room, accessed via some very ominous stairs, is filled with rusty clutter and worn-out machinery.

TikTok users were quick to point out how scary the basement room is.

“Knowing that’s my basement would scare me,” one user said.

“Hide and seek would be so difficult,” another joked.

“It’s definitely haunted down there,” one user commented.