
Hitting the golden target of 10,000 steps a day has long been considered one of the best ways to keep ourselves physically and mentally healthy.

Studies have suggested that reaching a five-digit step count can reduce the risk of suffering a range of serious health problems, including dementia, type 2 diabetes and heart disease.

But walking that far at a steady pace can take about two hours, an impractical goal for many people with busy lives.

However, experts say you can get the same benefits as 10,000 steps by walking faster for shorter periods of time or exercising for less than half an hour.

Here, MailOnline explains the history and science behind the 10,000-step goal and asks personal trainers and exercise experts whether we really need to walk that far every day…

Studies suggest the magic number may reduce the risk of developing dementia, type 2 diabetes and heart disease

Where does the “magic” number come from?

Getting 10,000 steps a day has long been considered the holy grail of regular fitness.

It is built into many pedometers, fitness watches and smartphones as the default recommended step goal.

But surprisingly, this figure is not the result of a large, rigorous scientific study.

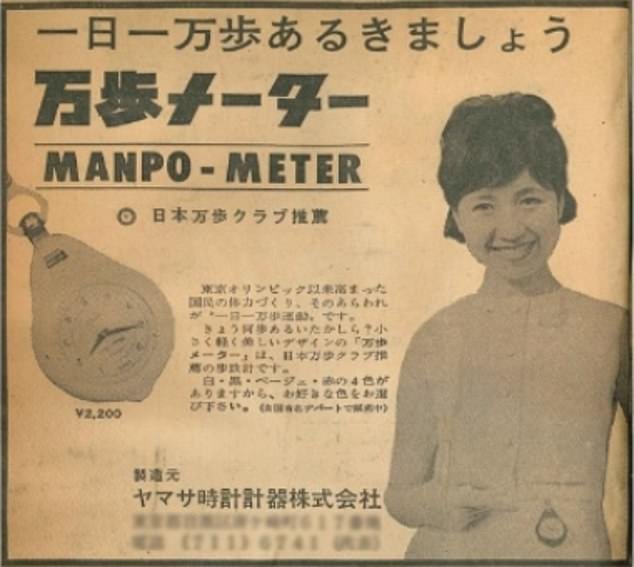

Instead, it was plucked out of thin air as part of a clever marketing ploy by a Japanese company trying to sell pedometers 60 years ago.

The 1964 Tokyo Olympics were underway at the time, and many companies in the country were trying to profit from the increased focus on fitness.

A company called Yamasa launched a pedometer called Manpo-kei, which literally means “10,000 step meter” in Japanese.

Without foreseeing it at the time, they sparked a global fascination with this number and everyday fitness.

But this arbitrary figure was not based on any science at the time. Instead, it was chosen simply because it was a nice, round, memorable number.

The 10,000 step goal originated as a clever marketing ploy by a Japanese company attempting to sell pedometers following the 1964 Tokyo Olympics. At that time, there was an increasing emphasis on fitness in the host country and businesses had attempted to capitalize on the excitement surrounding the Games (pictured, an advertisement for the original gadget).

Health Benefits of Walking 10,000 Steps

Although the initial campaign to take 10,000 steps a day was not based in science, researchers have since found that it may have merit.

In fact, since the 1964 Olympics, studies have consistently shown that 10,000 daily steps is a good target for anyone looking to stay healthy, and it seems far more beneficial than simply sticking to 5,000.

Experts agree that walking every day has many health benefits.

“Increasing physical activity, such as walking, helps improve cardiovascular fitness, weight management, mood, sleep and cognitive function,” Lindsay Bottoms, a researcher in exercise and health physiology at the University of Hertfordshire, told MailOnline.

Not only is walking “simple” and “accessible”, making it a “practical” exercise for people of all ages, it may also reduce the risk of developing chronic diseases such as dementia and certain cancers, Ms Bottoms said.

It can also improve existing health conditions such as diabetes and strengthen the immune system, she said.

One study found that for every 2,000 steps walked per day, or about 15 minutes of walking, the risk of premature death decreased by between 8 and 11 percent.

But there appears to be an upper limit, with researchers finding that the health benefits of walking more than 10,000 steps were negligible.

Another study, which followed 78,500 Britons aged 40 to 79, asked participants to wear a pedometer for a week to track their daily step counts.

Researchers from Denmark and Australia then checked seven years later to see if any of the participants had been diagnosed with dementia.

The results suggested that 9,800 steps were optimal for preventing dementia, apparently reducing the risk by 51 percent.

According to a recent study published in the British Journal of Sports Medicine, walking between 9,000 and 10,500 steps per day reduces the risk of heart disease by up to 21 percent and premature death by up to 10 percent in “highly sedentary” adults.

Do you really need to take 10,000 steps?

Experts say 10,000 steps is far from a magic number for everyone.

Although touted as the ideal goal for staying fit and healthy, not everyone has time to take 10,000 steps every day, the equivalent of walking five miles.
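As a rough illustration of that arithmetic, the sketch below converts a step count into distance and walking time. It assumes only the two figures quoted in this article (10,000 steps is about five miles and takes about two hours at a steady pace); the constant and function names are made up for the example, and real stride length and pace vary from person to person.

```python
# Conversion rates derived from the article's own figures (assumptions,
# not measured values): 10,000 steps ~ 5 miles ~ 120 minutes at a steady pace.
STEPS_PER_MILE = 10_000 / 5
STEPS_PER_MINUTE = 10_000 / 120


def estimate_walk(steps):
    """Return (miles, minutes) for a step count at a steady pace."""
    miles = steps / STEPS_PER_MILE
    minutes = steps / STEPS_PER_MINUTE
    return miles, minutes


for steps in (3_000, 5_000, 10_000):
    miles, minutes = estimate_walk(steps)
    print(f"{steps:,} steps: about {miles:.1f} miles, about {minutes:.0f} minutes")
```

At a brisk pace the same number of steps would take less time than this steady-pace estimate suggests.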

Instead, experts say taking fewer steps at a faster pace could provide many of the same benefits.

‘There’s no need to take a set number of steps every day,’ London-based celebrity personal trainer Matt Roberts told MailOnline.

He explained that instead of 10,000 steps, moving every day for a shorter period of 40 minutes or more will improve your chances of burning fat.

However, it’s better to complete these steps all at once, during a “long, brisk walk,” rather than in many short bursts throughout the day, he explained.

“That would be about 3,000 to 5,000 steps of fairly brisk walking and your gains would be realized,” he said.

“The reason more steps might be necessary is that for most people, there is simply no single time when 3,000 to 5,000 steps are taken in one period.

“Taking more steps throughout the day burns more calories, but there’s no guarantee that it burns fat if that’s your goal.”


Studies have also shown the health benefits of taking fewer but faster steps.

Researchers who found that 9,800 steps were optimal for preventing dementia also revealed that walking 6,300 steps, but at a fast pace (40 steps per minute), reduced the risk of developing dementia by 57%.

This suggests that fewer steps at a faster pace might be just as good, or even better.

Studies also show that you don’t need to count your steps if you do other exercise, even for less than half an hour a day.

A meta-analysis of 11,000 people published in 2023 found that those who exercised just 22 minutes a day had a 40% lower risk of death than those who didn’t exercise at all.

Moving more and not being sedentary could also be enough to have a significant impact on your health.

Ms Bottoms said: “In recent years, research has shown that prolonged periods of sitting can increase the risk of various health problems and that this can be reduced by simply moving more.”

“For some people, however, having a goal to achieve helps motivate them to get moving.

“I would focus more on breaking up sitting time and making sure you take as many steps as possible and just be more active.”

She suggests aiming for around 7,000 steps per day and if you’re not achieving that, increase your step count by 1,000 per day to gradually get closer to your goal.
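That build-up can be sketched as a simple schedule. The snippet below is only an illustration of the advice as described here: the function name, the starting count and the choice to cap the final day at the goal are assumptions, not part of Ms Bottoms' recommendation.

```python
def step_plan(current_steps, goal=7_000, daily_increase=1_000):
    """List daily step targets, rising by daily_increase until the goal is met."""
    if daily_increase <= 0:
        raise ValueError("daily_increase must be positive")
    targets = []
    steps = current_steps
    while steps < goal:
        # Never overshoot: the last target is capped at the goal itself.
        steps = min(steps + daily_increase, goal)
        targets.append(steps)
    return targets


# Hypothetical example: someone currently averaging 3,500 steps a day.
print(step_plan(3_500))  # [4500, 5500, 6500, 7000]
```

Someone already at or above the goal gets an empty plan, since there is nothing left to build up to.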