Meta ran ads on Facebook and Instagram for an artificial intelligence app that used a fake nude image of Jenna Ortega made from a photo taken when she was a minor.

The ‘Wednesday’ star was targeted by the Perky AI app, which was promoted as a way to create sexually explicit images of anyone using artificial intelligence.

The app ran at least 11 ads on Meta’s platforms last month, according to NBC News. At least one showed a blurred image that appeared to show Ortega topless, based on a photograph taken when she was 16.

The app, which costs $7.99 a week, showed users how to create fake nudes of real people using prompts such as “no clothes,” “latex costume” and “Batman underwear.”

The app’s listing on Apple’s App Store said users could “enter a message to look and dress however they want.”

It is the latest controversy involving the distribution of celebrity deepfakes on the Internet. It comes months after AI-generated pornographic images of Taylor Swift went viral.

The AI-powered app has run more than 260 different ads on Meta’s platforms since September, 30 of which the company removed for violating its policies.

One of the ads featuring Ortega, who is now 21, had more than 2,600 views, NBC News reports. Meta removed the ads, and Apple pulled the app, after the outlet flagged them.

Meta earns 95 percent of its revenue from advertising and grossed more than $131 billion in 2023.

Perky AI listed its developer as RichAds, a Cyprus-based “self-service global advertising network” that creates push ads, according to its website.

Singer Sabrina Carpenter was also the subject of several of the app’s ads.

Carpenter is currently on tour supporting Taylor Swift, another celebrity who has fallen victim to the disturbing deepfake trend.

Swift was left “furious” by the AI images circulating online and was considering legal action against the sick deepfake porn site that hosts them.

The singer was the latest target of the website, which violates state pornography laws and continues to outpace cybercrime squads.

Dozens of graphic images of Swift were uploaded to Celeb Jihad, showing the singer in a series of sexual acts while dressed in Kansas City Chiefs memorabilia and inside the team’s stadium.

The pornography was viewed 47 million times before being removed, although it is still accessible.

Experts have warned that the law is woefully behind on the issue of AI-generated images and that increasing numbers of women and girls could be targeted.

“At this point we are too little, too late,” said Mary Anne Franks, a professor at George Washington University Law School.

“It won’t just be the 14-year-old girl or Taylor Swift. It will be politicians. It will be world leaders. It will be elections.”

The pernicious technology had already begun to infiltrate schools before the Swift scandal.

It recently emerged that a group of teenage girls at a New Jersey high school had been targeted when male classmates began sharing AI-generated nude images of them in group chats.

On October 20, one of the boys in the group chat allegedly told a classmate about the images, and that classmate reported it to school administrators.

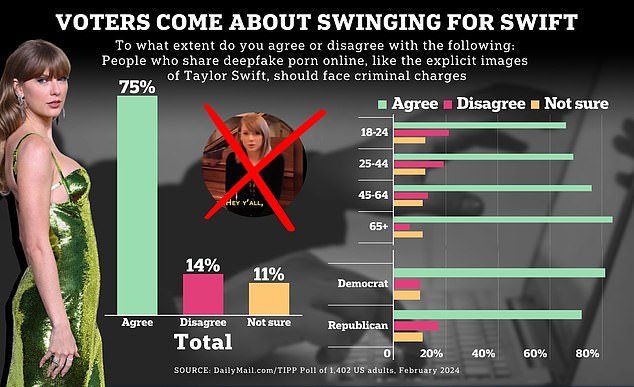

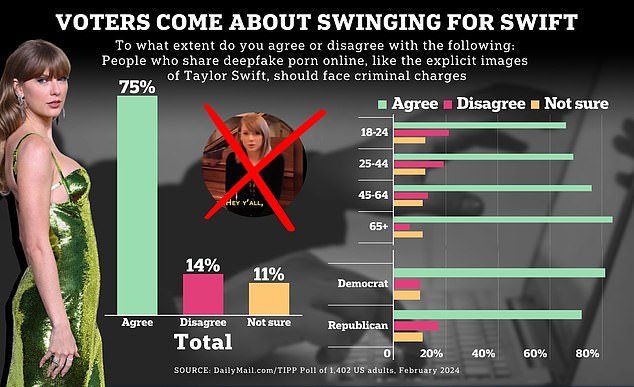

Lawmakers proposed the DEFIANCE Act, which would allow people to sue those who created deepfake content of them.

But it wasn’t until fake photos of Swift went viral that lawmakers pushed for action.

U.S. senators introduced the Disrupt Explicit Forged Images and Non-Consensual Edits Act of 2024 shortly after Swift became a victim of the technology.

“While the images may be fake, the harm to victims from the distribution of sexually explicit deepfakes is very real,” Senate Majority Whip Dick Durbin (D-Ill.) said last week.

“Victims have lost their jobs and may suffer from ongoing depression or anxiety.

“By introducing this legislation, we are putting the power back in the hands of victims, cracking down on the distribution of ‘deepfake’ images and holding those responsible for the images accountable.”

A 2023 study found that in the last five years there has been a 550 percent increase in the creation of doctored images, with 95,820 deepfake videos posted online last year alone.

In a DailyMail.com/TIPP poll, about 75 percent of respondents agreed that people who share fake pornographic images online should face criminal charges.

Deepfake technology uses artificial intelligence to manipulate a person’s face or body, and there are currently no federal laws against creating or sharing such images.

“Meta strictly prohibits child nudity, content that sexualizes children, and services that offer AI-generated non-consensual nude images,” Meta spokesperson Ryan Daniels said in a statement to NBC.

DailyMail.com has contacted Meta and Perky AI for comment on the ads featuring Ortega.