- Travis and Jason Kelce’s New Heights won Podcast of the Year at the iHeart Awards

- The brothers were not in attendance, but sent a hilarious video acceptance speech

Jason and Travis Kelce’s incredible year reached new heights Monday when the popular NFL duo won Podcast of the Year at the iHeart Radio Awards.

The brothers have enjoyed a wild couple of months, with Travis winning the Super Bowl and Jason retiring from football – while their podcast also shot to the top of the charts.

Now the Kelces have a trophy to show for it after their New Heights show beat the likes of My Favorite Murder and SmartLess to win Podcast of the Year.

Neither brother attended the ceremony in Austin, Texas, but they did record a video message to the crowd, in which Travis joked: ‘Get the hell out of here… are people actually listening to this?’

Jason then went on to thank Taylor Swift’s fans for voting for them to win – amid his brother Travis’ high-profile relationship with the pop superstar.

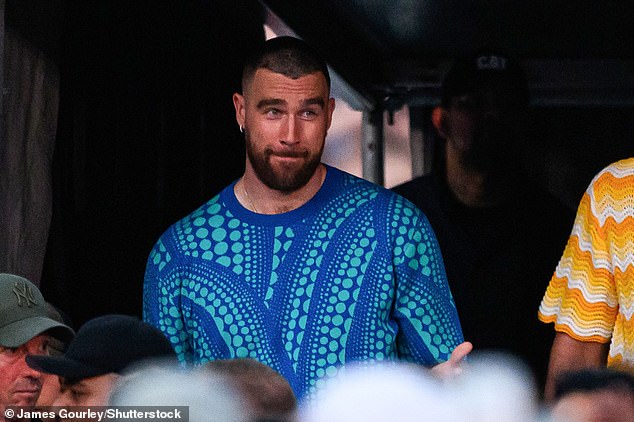

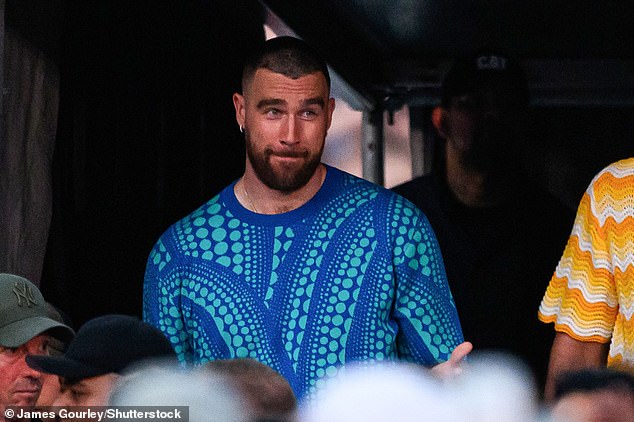

Travis (left) and Jason Kelce celebrate winning Podcast of the Year at the iHeart Radio Awards

They jokingly thanked Taylor Swift’s fans for voting for them in a pre-recorded speech

At the beginning of their acceptance speech, Jason said: ‘Right now we’re actually talking to the entire iHeart Radio Awards audience,’ before Travis added: ‘Oh shit, we wish we could be there guys, but we’re honored to accept this award.’

The Kansas City Chiefs tight end then added: ‘Podcast of the year is big s***,’ before Jason continued: ‘It’s an incredible honor, especially for two jabronies like us.

‘Receiving an award like this is beyond humbling and we’d be remiss if we didn’t immediately thank all the 92 percenters, AKA Swifties, for voting for us.’

Taylor’s NFL boyfriend recently supported her on the international leg of her Eras Tour

Jason and Travis could strike a new New Heights deal worth $100 million, it has been claimed

Earlier in the night, the Kelce brothers had also won Best Overall Ensemble, but missed out on Best Sports Podcast to Shannon Sharpe’s Club Shay Shay.

Accepting his award on stage, Sharpe joked: ‘I would so love to win this award. No seriously.’

Although the Kelces couldn’t be there in person, Travis is back on American soil after spending time with girlfriend Taylor Swift in both Australia and Singapore.

The pop sensation continues her sold-out Eras Tour, and Travis joined her for a handful of shows following the conclusion of the NFL season.