For Dianne Balon, as for millions of people in America, artificial intelligence was something she read about in the news.

She didn’t know that the technology would come to save her life.

Although Ms Balon was a picture of health, an artificial intelligence blood test in 2022 revealed that one of the world’s deadliest cancers was silently forming in her pancreas.

It caught the tumor in its earliest form, before it had a chance to grow and spread. The vast majority of pancreatic cancers are detected only after they have spread, by which time it is too late.

The test results provided a “key piece of the puzzle.” The woman, now 62, underwent surgery to remove the lesion that would have developed into “full-blown pancreatic cancer,” according to her surgeon.

“I’m incredibly grateful,” she told DailyMail.com. “AI completely saved my life, I won the lottery.”

Dianne Balon, 62, underwent an AI-powered blood test that led her to discover she was on the path to “full-blown pancreatic cancer.”

The test is a periodic blood draw that includes a report on the levels of different molecules, metabolites, proteins and chemicals in the blood, as well as an indication of the levels of inflammation in the body.

She revealed that even her surgeon was caught off guard before the operation and asked her: ‘Why are you here? We don’t normally see people this early in the process.’

Ms. Balon’s cancer journey began in 2017 at a health conference, which she was attending due to her work as vice president of a Canadian health insurer.

The mother of two from Edmonton, Canada, watched a presentation from Molecular You, a Vancouver biotech startup that had just launched a new blood test.

It works by analyzing more than 250 biomarkers that even the most sophisticated blood tests available in most hospitals cannot detect.

An artificial intelligence program trained to look for subtle changes in immune markers and inflammation then analyzes the results in a database.

Being “very curious” about her personal health, she decided to give it a try.

She underwent annual blood tests costing $700 between 2018 and 2022.

In 2022, her results showed “very high” rates of inflammation in her body and changes in several metabolites and proteins.


It was a complete shock: Ms Balon was thin, asymptomatic and ate healthily, so she had no reason to suspect she was ill.

‘While I didn’t understand what each and every one of those [biomarkers] was, I realized there were enough differences and I needed to ask more questions,’ she said.

She reported the results to her doctor, who ran a traditional blood test, which showed she was “in the norm” for inflammation.

But she wouldn’t take no for an answer and convinced her doctor to refer her for a biopsy and scans, which found a cancerous lesion on her pancreas.

A biopsy soon after confirmed that something was wrong and that her carcinoembryonic antigen (CEA) levels were through the roof.

Mrs. Balon can now live a long, healthy life and watch her two children and four grandchildren grow up.

CEA is a protein normally found in the tissue of a developing baby in the womb. In adults, an abnormal CEA level may be a sign of cancer.

“Their levels are supposed to be zero or very low, and mine were tens of thousands higher than they should have been, which was a huge red light and a big surprise,” she said.

In December she had surgery and the surgeon told her: ‘I put all this together. This is the trend, you are on the path to full-blown pancreatic cancer.’

Ms Balon underwent a distal pancreatectomy and splenectomy, where surgeons removed her spleen and part of her pancreas.

“I know I’m incredibly lucky… most people aren’t that lucky,” Ms Balon said.

Pancreatic cancer affects approximately 66,000 Americans each year and kills about 52,000 people, making it one of the deadliest types of cancer.

It often grows silently and produces symptoms that are dismissed as signs of other conditions.

About 80 percent of diagnosed cases are already at stage three or four at the time of initial evaluation, meaning it is incurable and too late for surgery.

The Molecular You test is currently only available in Canada and through a small number of select clinics in the US, but the company hopes to offer testing more widely later in 2024.

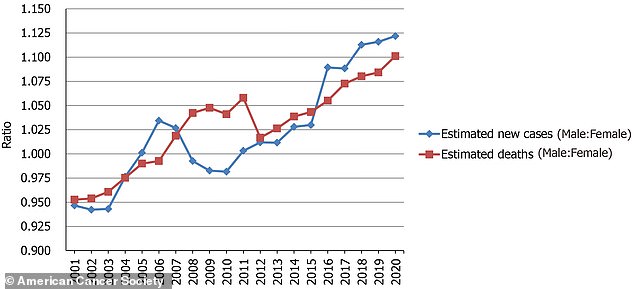

Ms Balon said the test would be a “game changer,” providing “another tool in the toolbox” to detect cancer early, particularly in young people, among whom cases are rising at worrying rates.

Currently there is no single diagnostic test that can tell if you have pancreatic cancer.

No screening: Diagnosis requires multiple imaging scans, blood tests, and biopsies, which are usually only done if the patient is symptomatic.