Move over, tradwives, because there’s a new type of creator taking social media by storm.

In recent years, married mothers who post videos of themselves lovingly cooking from scratch and romanticizing their everyday lives have become TikTok heavyweights, with millions of followers.

Riding on this success, stay-at-home boyfriends are becoming an increasingly hot property on TikTok, with fans saying these men are the reason they will “never lower their standards.”

William Conrad, 25, from Canada, is at the forefront of this social media movement and has racked up more than 10 million likes on his TikTok videos.

The self-described ‘stay-at-home boyfriend’ has been dating influencer Levi Coralynn for the past three years.

Pictured: William Conrad, 25, a self-described “stay-at-home boyfriend,” posing with some of his homemade choux rolls.

While she records her content for her 1.7 million followers every day, William fills his time cooking, cleaning, and generally taking care of their shared home.

Speaking to The Times, he explained: ‘Levi is the breadwinner and I clean the kitchen.’

“I feel like I’m contributing and that my role helps support what she’s accomplishing.”

Elsewhere in the interview, William revealed that it was his girlfriend’s idea to start sharing videos of his home cooking online.

At the beginning of each video, the star addresses the viewer as if he were Levi and speaks to the camera as he would to his partner.

In a video uploaded last week, William says: ‘Good morning, I hope you slept well. Let’s prepare your breakfast.’

In the 80-second video, William films himself preparing a homemade potato rosti topped with fresh cream, smoked salmon, poached eggs and hollandaise sauce, which took him two tries to perfect.

He then ends the video by placing the food on a tray along with a chopped kiwi, a cup of coffee and a vase of flowers to give to his girlfriend.

Pictured: William seen cleaning the house he shares with his influencer girlfriend Levi Coralynn in Canada.

Pictured: TikTok star Ricky Bee with his daughter Laguna. The father’s videos on how to hold a baby have racked up millions of views.

“This is not breakfast,” one fan responded. “It’s a proposal.”

“I need this man in my life,” gushed another.

Referencing a famous TikTok tradwife, a third said: “He’s our Nara Smith.”

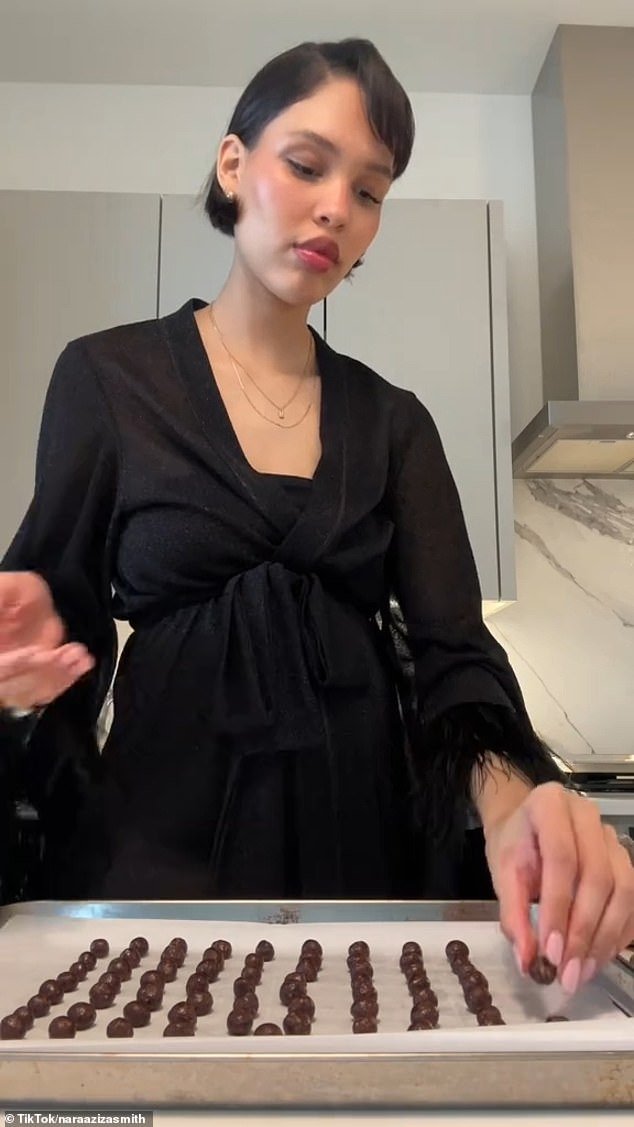

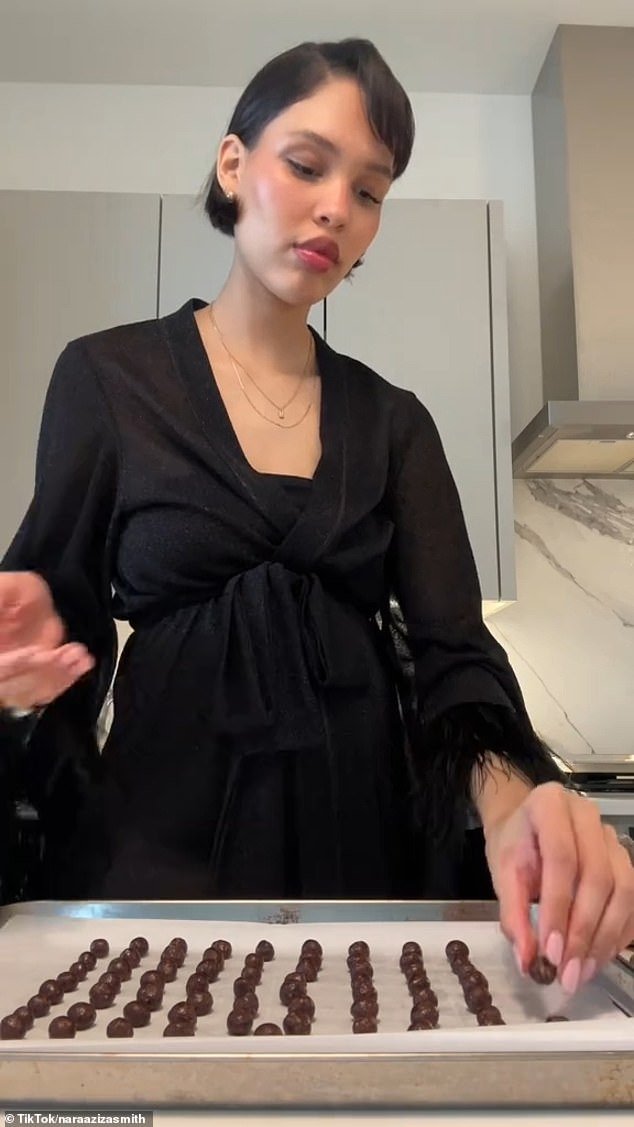

Last month, the glamorous tradwife sparked debate on TikTok when she filmed herself making cereal from scratch for her young children.

“I asked my little kids what they wanted for breakfast and they both said cereal, so it was cereal and we started right away,” the Mormon housewife explained in the opening of her now-viral video.

But instead of opening her cabinet and grabbing a sugary cereal, Nara proceeded to make her own and walked her followers through the painstaking process while wearing a glamorous black silk robe with fluffy cuffs.


Pictured: William Conrad and his girlfriend of three years, Levi Coralynn, pose in the garden of their home in Canada.

When asked if his viewers have different expectations of him, William said, “Maybe I represent the pendulum swinging the other way, where more women go out to work while their husbands stay at home.”

In a video posted to her TikTok yesterday, Levi filmed her boyfriend cooking for her and wrote: ‘Be patient. Don’t settle.’

William’s rise to fame comes as young father Ricky Bee has amassed 170,000 followers for his videos about raising his baby Laguna.

In January, the father posted a video showing his followers the correct ways to hold a three-month-old baby, which has amassed more than 22 million views.

He joked: ‘Do you have one of these things? This is how you hold a baby.’

The doting father then proceeded to explain to his followers the techniques he has dubbed the “floating chair” and the “arm bar.”

On January 26, Nara, who is South African and German, shared a video creating both treats in a glamorous black robe with faux fur cuffs and the internet went wild.

‘I didn’t know making homemade cereal was so easy!’ one follower wrote, while others joked about how intense the cooking session seemed.


The one-minute video sent social media users into a frenzy, with many taking to the comments to lust after Ricky.

One responded: ‘I want to be her!’

Another added: ‘He treats her like a Koala bear, super cute!’

Meanwhile, a third said: ‘When a dad is just a dad! We love that!’

Ricky told The Times: “I think for dads, (the expectation) is nothing and that’s why some think I’m amazing for doing something.”

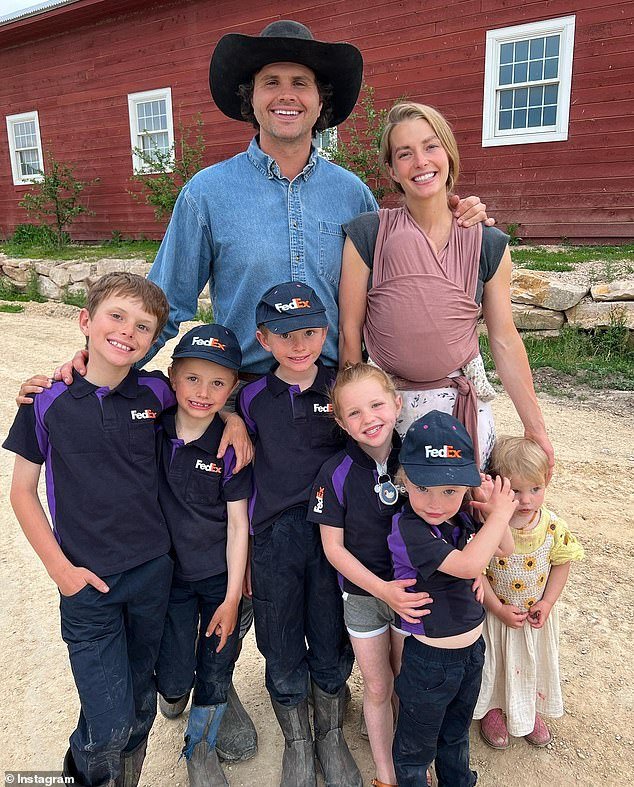

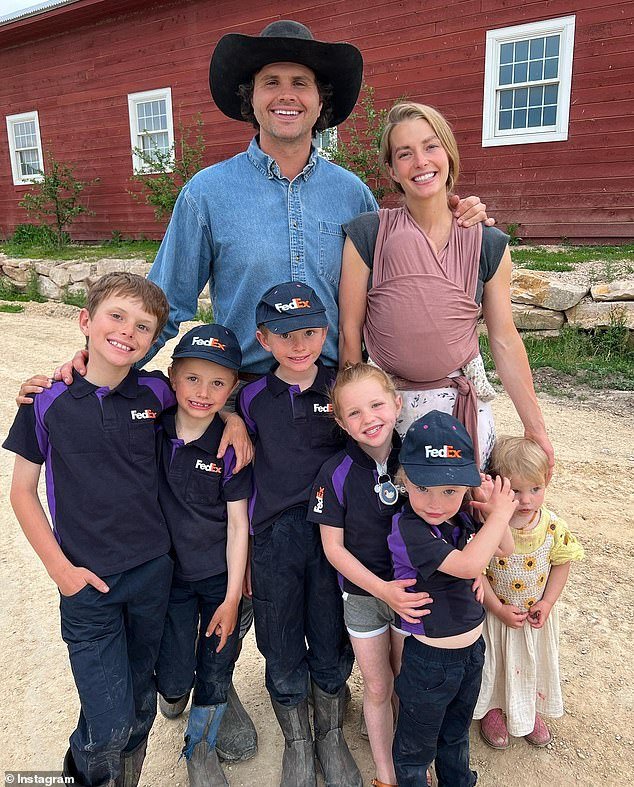

Last month, one of TikTok’s most famous tradwives sparked debate when she competed in Mrs. World just two weeks after giving birth.

Hannah Neeleman, 33, ditched her postpartum diapers for a jaw-dropping dress and swimsuit to head to Las Vegas to compete for the crown last week.

She showed off her stunning looks while competing in Mrs. World, just two weeks after giving birth to her eighth child.


The Utah-based influencer, who has become a social media star by regularly documenting her stay-at-home lifestyle on her family’s farm, shared a behind-the-scenes look at her beauty pageant prep, revealing how she glammed up for the stage while simultaneously breastfeeding her newborn.

Having left her seven other children at home on the farm, Hannah took her two-week-old baby to represent the United States in the international Mrs. World competition, in which married women from around the world fight for the crown.

Hannah, a former Juilliard-trained dancer, traded in her baggy clothes for gowns and was ready to show what it means to compete with a newborn, Flora Jo, in tow.

Speaking to The New York Times, she noted that although she was “still bleeding a little,” she knew she was ready to show off her beauty on the runway.

The Utah-based influencer, who has become a social media star by showing off her farming lifestyle online, looked glamorous with her newborn as she prepared to strut down the runway.

She told the outlet: “A lot of us have kids, and I don’t think there’s any shame in showing that I just had a baby.”

“I mean, I’m not going to have a perfectly flat stomach.”

Hannah documented her pageant experience on social media, giving her followers a behind-the-scenes look at what it’s really like to compete with a newborn.

Behind the glitz and glamor of the gorgeous dresses and shimmering makeup is a 33-year-old woman trying to balance the duties of a mother and a pageant queen.

In one photo, Hannah can be seen sitting behind a ring light nursing her baby while her makeup artists and hairstylists hover over her.

The mother of eight told The New York Times: “She’s inhaled a lot of hairspray, but other than that, she’s stayed safe.”