The mother of Princess Beatrice’s stepson has said she is glad her son Wolfie has “two parents trying to help him” navigate his childhood.

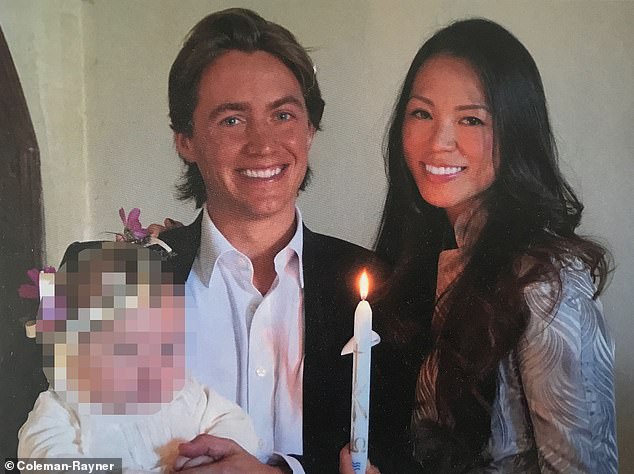

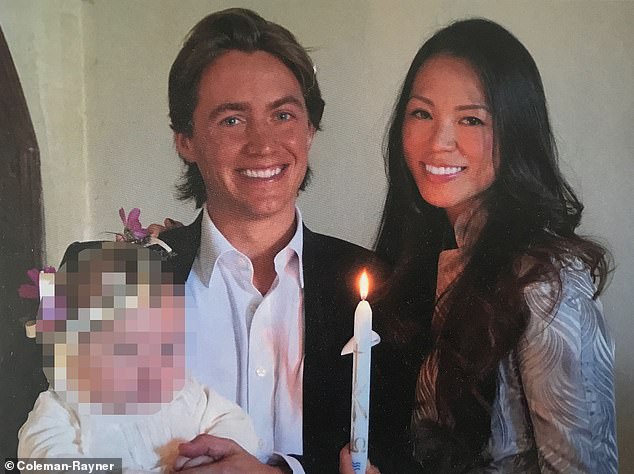

Dara Huang, 41, who was in a relationship with Edoardo Mapelli Mozzi between 2015 and 2018, shares seven-year-old Wolfie with her ex, who is now married to Princess Beatrice.

He and Beatrice are parents to two-year-old Sienna Elizabeth Mapelli Mozzi and co-parent Christopher ‘Wolfie’ Mapelli Mozzi with Dara and her current partner.

The architect, who is from Florida but lives in Chelsea, said she is glad her seven-year-old son has “two parents trying to help him on both sides.”

She told Harper’s Bazaar: “Wolfie has had two sets of parents who tried to help him on both sides, and I just think, ‘The more, the better.’

“I feel lucky to have such positive people around him who really embrace him, because it didn’t have to be so easy.”

Dara added: “It all depends on your point of view. I don’t understand people who are divorced and then have their children as collateral; that doesn’t make any sense.

“It’s about creating a happy home and lifestyle.”

Her conversation with the magazine comes after she revealed in a cryptic Instagram post in January that 2023 was “a really challenging year” for her.

Dara shared a candid post with her 62,000 Instagram followers revealing that it was the year with the “most changes.”

Alongside the snaps, she listed several things she had done throughout the year, including trips to Miami, London, Paris, Venice, Ibiza, Frankfurt, New York and Greece.

The architect did not reveal what her challenges were, but said she felt 2024 would be better.

“2023 was actually a really challenging year for me,” she said. “I won’t go into details, but it is definitely the year with the most changes.

“I’m not sure why, but I have a feeling that 2024 will be our year and a lot of positivity will come out of it. I’m very excited and ready!” she added.

Her list of trips included “some ‘workidays’, which is when I have to travel for work, and I turn it into ‘vacations’ too: Miami, London, Paris, Venice, Ibiza, Frankfurt, NYC, Greece.”

In another post, she looked back on what she did in 2023, including filming her first TV show, moving, and designing hotels in Paris and Bali.

She also proudly shared how she completed large projects in Miami, the Hamptons, and New York, as well as going horseback riding in the ocean.

Dara’s son Wolfie made his first appearance at a royal engagement in December, at Kate Middleton’s Christmas carol concert.

Beatrice previously described Wolfie as a “bonus boy”, while Sarah Ferguson refers to him as her grandson.

Dara was engaged to interior designer Edoardo, 40, before he married the King’s niece Princess Beatrice, 35.

Last Christmas, Wolfie stayed in Norfolk with his stepmother Bea, his father, property developer Edoardo Mapelli Mozzi, and his two-year-old half-sister Sienna.

Wolfie then swapped real-life princesses for fairy-tale princesses on a Disney cruise with his mother, architect Dara Huang, Richard Eden reports.

The two then joined Dara’s family in Florida, where she grew up.

Wolfie had previously stayed out of the public eye, but proudly walked hand in hand with Beatrice to Westminster Abbey last month.

Dara was born and raised in the United States, where her maternal grandfather had settled after emigrating from Taiwan. Last year she was granted British citizenship.

The Harvard-educated architect, who grew up in Florida, has a bachelor’s degree from the University of Florida and then moved to the Ivy League school to earn her Master of Architecture.