Researchers have criticized US officials for not implementing stricter rules on artificial intelligence before pop star Taylor Swift fell victim to deepfakes.

Images showing the Grammy winner in a series of sexual acts, dressed in Kansas City Chiefs memorabilia and in the stadium, were viewed 47 million times online before being removed.

A professor at George Washington University Law School said that if proper legislation had been “passed years ago,” Swift and others would not have experienced such abuses.

“At this point we are too little, too late,” said Mary Anne Franks.

“It won’t just be the 14-year-old girl or Taylor Swift. They will be the politicians. They will be world leaders. There are going to be elections.”

Sexually explicit and non-consensual fake images of Taylor Swift circulated on social media and were viewed 47 million times before being removed.

At a New Jersey high school, a group of teenagers were targeted with deepfake images when their classmates began sharing fake nude photos of them in group chats.

On October 20, one of the boys in the group chat allegedly told a classmate about it, and that classmate reported it to school administrators.

“My daughter texted me, ‘Mom, naked pictures of me are being distributed.’ That’s it. Heading to the principal’s office,” one mother told CBS News.

She added that her daughter, 14, “started crying and then she was walking down the halls and saw other girls at Westfield High School crying.”

But it wasn’t until fake photos of Taylor Swift went viral that lawmakers pushed for action.

X closed the account that originally posted the graphic deepfake images of Swift for violating the platform’s policy, but it was too late: the images had already been reposted 24,000 times.

A report by 404 Media revealed that the images may have originated in a Telegram group, where users allegedly joked about how the images of Swift had gone viral.

X said its teams were taking “appropriate action” against the accounts that posted the deepfakes and that it was monitoring the situation and removing the images.

Last week, U.S. senators introduced the Disrupt Explicit Forged Images and Non-Consensual Edits Act of 2024 (DEFIANCE Act) shortly after Swift became a victim of the technology.

“While the images may be fake, the harm to victims from the distribution of sexually explicit deepfakes is very real,” Senate Majority Whip Dick Durbin (D-Ill.) said last week.

“Victims have lost their jobs and may suffer from ongoing depression or anxiety.

“By introducing this legislation, we are putting the power back in the hands of victims, cracking down on the distribution of ‘deepfake’ images and holding those responsible for the images accountable.”

The DEFIANCE Act would allow people to sue those who create deepfake content of them.

Last year, lawmakers introduced the Preventing Deepfakes of Intimate Images Act, which would make it illegal to share non-consensual deepfake pornography, but it has not yet passed.

“If legislation had been passed years ago, when advocates were saying this is what would happen with this type of technology, maybe we wouldn’t be in this position,” Franks, a professor at George Washington University Law School and president of the Cyber Civil Rights Initiative, told Scientific American.

Franks said lawmakers are doing too little, too late.

“We can still try to mitigate the disaster that is emerging,” she explained.

Women are “canaries in the coal mine,” Franks said, referring to how AI abuse disproportionately affects women.

She added that eventually “it won’t just be the 14-year-old girl or Taylor Swift. They will be the politicians. They will be world leaders. There are going to be elections.”

A 2023 study found that in the last five years there has been a 550 percent increase in the creation of doctored images, with 95,820 deepfake videos posted online last year alone.

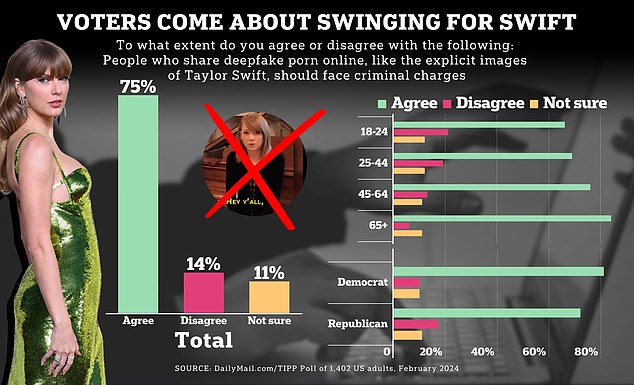

In a DailyMail.com/TIPP poll, 75 percent of respondents agreed that people who share deepfake pornographic images online should face criminal charges.

Deepfake technology uses artificial intelligence to manipulate a person’s face or body, and there are currently no federal laws protecting people against the creation or sharing of such images.

Rep. Joseph Morelle (D-NY), who introduced the Preventing Deepfakes of Intimate Images Act, called on other lawmakers to step up and take urgent action against the rise of deepfake images and videos.

The images and videos “can cause irrevocable emotional, financial and reputational harm,” Morelle said, adding: “And, unfortunately, women are disproportionately affected.”


Yet despite lawmakers’ statements, there are still no federal guardrails in place to protect Americans from falling victim to non-consensual deepfake images or videos.

“It is clear that AI technology is advancing faster than the necessary guardrails,” said Congressman Tom Kean, Jr., who proposed the AI Labeling Act in November of last year.

The bill would require AI companies to label any AI-generated content and to take reasonable steps to prevent the publication of non-consensual content.

“Whether the victim is Taylor Swift or any young person in our country, we need to put safeguards in place to combat this alarming trend,” Kean said.

However, there is one big catch in all the legislative uproar: who to charge with a crime once a law criminalizing deepfakes is passed.

It is highly unlikely that the person responsible will come forward and identify themselves, and forensic analysis cannot always identify and prove which software created the content, according to Amir Ghavi, senior advisor on artificial intelligence at the law firm Fried Frank.

And even if law enforcement could determine where the content originated, they could be prevented from taking action by Section 230 of the Communications Decency Act, which largely shields websites from liability for what their users post.

Still, these potential obstacles aren’t deterring politicians in the wake of Swift’s ordeal with sexually explicit deepfake content.

“No one, whether celebrities or ordinary Americans, should ever have to find themselves featured in AI pornography,” said Sen. Josh Hawley (R-Mo.).

Speaking about the DEFIANCE Act, he said: “Innocent people have the right to defend their reputations and hold perpetrators to account in court. This bill will make that a reality.”