Sam Smith is set to perform at this year’s Proms, and the BBC has insisted their show will be “completely appropriate for the festival”.

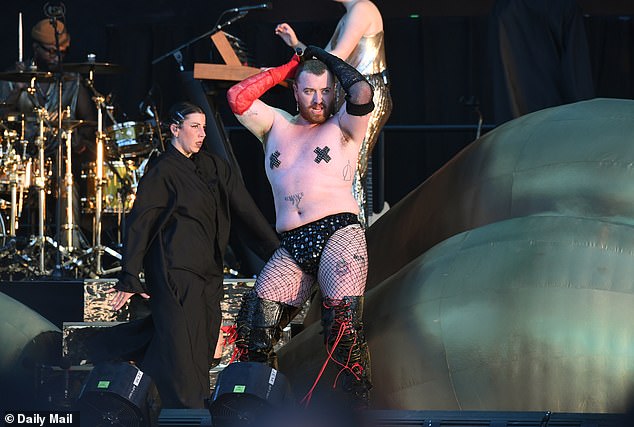

The singer, 31, has performed a series of controversial shows in recent years and is often seen wearing risqué and sexualised outfits on stage.

Sam announced that they were non-binary in 2019 and asked people to use they/them pronouns when referring to them.

This year they will headline a BBC Prom concert on August 2 in an extension of the pop programme.

It comes after Sam’s daring performance at the 2023 BRITs sparked more than 100 Ofcom complaints for ‘devil worship’ and a passionate kiss.

Sam performed dressed as the devil, with horns sprouting from their hat, a look similar to the one that drew the ire of viewers at the Grammys the previous week.

Their Gloria tour last year also drew complaints from some fans, who said it was not suitable for a younger audience.

But Sam Jackson, head of Radio 3, has insisted that Sam’s show for the Proms would be appropriate, saying: “I think what you will see is a performance that is completely appropriate for the Proms and completely appropriate for the audience present.”

“The focus will be on Sam Smith and his music, on the unique orchestral arrangement, and on the fact that this will be the only opportunity to see Sam Smith in the UK this year.

“This is very much a Prom, it’s not Sam Smith at the Royal Albert Hall. The look will be completely appropriate for that festival.”

For their show, Sam will perform their 2014 Grammy-winning debut album, In the Lonely Hour, with the BBC Concert Orchestra to mark the record’s 10th anniversary.

Elsewhere at the festival, Florence Welch of indie-rock band Florence + The Machine will perform their 2009 Brit Award-winning album Lungs.

She will perform alongside Grammy-winning composer Jules Buckley and his orchestra when she takes the stage on September 11.

It comes after Sam showed off their novel sense of style once again earlier this week, when they stepped out in New York in a flowy black cape, but left fans divided over the look.

Sam was stopped on the street by popular TikTok account The People Gallery to talk through their latest eye-catching outfit.

The account, run by Maurice Kamara, has gained more than a million fans for sharing videos asking passers-by and celebrities about their outfits, chatting with everyone from Kim Kardashian to Willem Dafoe.

Sam appeared in the latest clip, wearing an unusual all-black outfit.

They explained that their high-necked cape was by Rick Owens, paired with sky-high platform boots and the celebrity-favorite bag, the Prada Cleo, which retails for a staggering £2,400.

The hitmaker said their favorite designers at the moment were Rick Owens and Vivienne Westwood, admitting: “I never thought Vivienne Westwood clothes were for me and recently I can’t stop wearing Westwood. I love it!”

When asked for their style advice, Sam said: “Every day starts anew. That’s what I do personally.”

They added: “My favorite food is anchovies with burrata and a vodka martini.”

Although Maurice gushed that Sam “looked amazing,” fans were less convinced by the avant-garde look.

Several took to the comments on TikTok and joked: “When you leave the hair salon still wearing the cape; It looks like the cape my hairdresser puts on me to style my hair; Was the [sic] right in the middle of a haircut or something?

“Being prepared for a haircut anytime, anywhere is crazy; He left the cape on from the haircut; I’m pretty sure it’s one of those things they give you when you go to the barber.

“Serving Professor Snape ELEGANZA!!; He is [sic] dressed as Whoopi Goldberg in Sister Act; The barbershop forgot to get their bib back.

“It looks like Sam Smith has just left the hair salon; The barbers called and said they wanted his cape back; There’s a barber somewhere who says, ‘You’ll never guess who pinched my cape!’”

But several other fashion fans approved of the experimental style and attacked those who mocked Sam.

They wrote: “If I saw Sam Smith on the street sporting this stylish barber’s cape, I’d stop them too for the fashion details; This is what happens when you are so happy with life that you can express yourself freely.

“It’s crazy how someone can have incredible talent, seem really nice and kind, but receive a bit of hate simply for wearing something they’re happy in; I love the flowy nature of this for them because it just gently hints at their curves.”

Sam went viral online for their red carpet look at last year’s BRIT Awards, a rubber bodysuit with dramatically flared arms and legs.

Sam has worn many viral outfits over the past few years and hasn’t been afraid to push the boundaries when it comes to fashion.

After coming out as non-binary, they began playing with rigid gender norms in clothing, opting for more androgynous looks.

The five-time Grammy winner has also been dressing more daringly and opting for avant-garde looks with outlandish shapes.
