- Those born in or after 1965 are more likely to have damaged tissues and cells

- Above-average accelerated aging was linked to a 17 percent higher risk of any solid tumor cancer

It’s one of the most baffling medical mysteries of our time: Why are so many young, otherwise healthy people diagnosed with cancer?

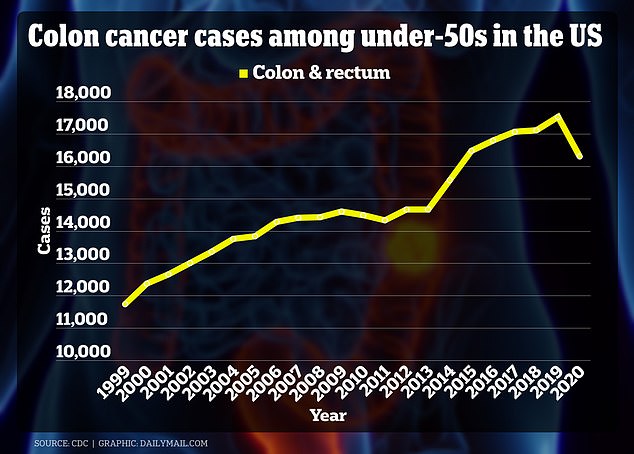

Cases of the disease have soared by 30 percent in people under 50 in the past 20 years, and high-profile patients include Kate Middleton, the Princess of Wales, 42.

Now, American researchers have found a possible explanation.

A new study by experts at Washington University in St Louis found that generations with higher rates of cancer have cells and tissues in their bodies that are older than their age.

In other words, people born in or after 1965 (aged 59 or younger) may be biologically older than their chronological age.

Cells underpin all bodily functions. As they age, their ability to repair themselves and multiply declines, creating a host of knock-on effects.

The graph above shows that colon cancer cases among people under 50 rose by more than 5,500 in 20 years. The dip in 2020 reflects fewer people attending screening during the Covid pandemic.

Those with above-average accelerated aging had a 17 percent higher risk of developing any type of solid tumor cancer, including lung, gastrointestinal and uterine cancer.

Faster aging may be driven by stress and poorer mental health, obesity, sedentary lifestyles and junk food consumption.

For the study, researchers tracked data from nearly 150,000 people in the UK Biobank.

They examined nine markers from routine blood tests to determine each person's biological age: the age of their cells and tissues.

The markers included albumin, a liver-produced protein that helps stop fluid leaking out of blood vessels and that declines with age, and the average size of red blood cells, which increases with age.

Mature red blood cells do not divide, but larger ones can signal that the bone marrow cells producing them are dividing less efficiently.

These were entered into an algorithm called PhenoAge, which generated a biological age for each person.

The researchers then compared this with the participants' actual ages and checked cancer registries to see how many had been diagnosed with early-onset cancer, defined as cancer diagnosed before age 55.
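For readers curious about that comparison step, here is a minimal Python sketch. It assumes each person's biological age has already been produced by PhenoAge (the PhenoAge formula itself is not reproduced here). It also assumes "age acceleration" is simply biological age minus actual age, and that "above-average" means above the sample mean; these are simplifications, not the study's exact method. The names `Participant`, `age_acceleration`, `flag_accelerated` and `is_early_onset` are hypothetical, and the ages are made up.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional

# Cut-off described in the article: "early" cancer = diagnosed before age 55.
EARLY_ONSET_CUTOFF = 55


@dataclass
class Participant:
    chronological_age: float  # actual age in years at the blood test
    biological_age: float  # assumed PhenoAge output, taken as a given input here
    cancer_diagnosis_age: Optional[float] = None  # None if no cancer is recorded


def age_acceleration(p: Participant) -> float:
    """Years by which biological age exceeds actual age (negative = 'younger')."""
    return p.biological_age - p.chronological_age


def flag_accelerated(people: List[Participant]) -> List[bool]:
    """True for anyone whose acceleration is above the sample mean (an assumed cut-off)."""
    accel = [age_acceleration(p) for p in people]
    avg = mean(accel)
    return [a > avg for a in accel]


def is_early_onset(p: Participant) -> bool:
    """True if a cancer was diagnosed before the early-onset cut-off."""
    return p.cancer_diagnosis_age is not None and p.cancer_diagnosis_age < EARLY_ONSET_CUTOFF


# Made-up example participants, not study data.
people = [
    Participant(40, 45),      # biologically 5 years "older"
    Participant(50, 48, 52),  # biologically 2 years "younger", cancer at 52
    Participant(45, 45),      # biological age matches actual age
]
print(flag_accelerated(people))  # [True, False, False]
print([is_early_onset(p) for p in people])  # [False, True, False]
```

Here the average acceleration is 1 year, so only the first person counts as aging faster than average; the real study used its own statistical definitions across roughly 150,000 people.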

Almost 3,200 early-onset cancers were diagnosed.

People born in 1965 or later were 17 percent more likely to show accelerated aging than people born between 1950 and 1954.

Ruiyi Tian, a graduate student at Washington University in St Louis and first author of the study, said: "Unlike chronological age, biological age can be influenced by factors such as diet, physical activity, mental health, and environmental stressors.

“Accumulating evidence suggests that younger generations may be aging more rapidly than expected, likely due to earlier exposure to various risk factors and environmental insults.”

People with the fastest aging had twice the risk of early-onset lung cancer compared with people with the slowest aging.

They also had a 60 percent increased risk of gastrointestinal tumors and an 80 percent increased risk of uterine cancer.

Smoking and vaping are known to damage cells, and therefore raise biological age, through their impact on blood vessels and blood pressure.

Not getting enough sleep can also increase biological age, as can being overweight or obese.

All of these factors have also been proposed as contributors to the rise in cancer cases among people under 50.