Russia and China must ensure that only humans, and never artificial intelligence, have control of nuclear weapons to avoid a possible apocalyptic scenario, a senior US official said.

Washington, London and Paris have agreed to maintain full human control over nuclear weapons, said State Department arms control official Paul Dean, as a safeguard to prevent any technological failure from plunging humanity into a devastating conflict.

Dean, principal deputy assistant secretary of the Office of Arms Control, Deterrence and Stability, yesterday urged Moscow and Beijing to do the same.

“We think it’s an extremely important standard of responsible behavior and we think it’s something that would be very welcome in a P5 context,” he said, referring to the five permanent members of the United Nations Security Council.

This comes as regulators have warned that AI is facing its “Oppenheimer moment” and are calling on governments to develop laws restricting its application to military technology before it is too late.

The alarming statement, referring to J. Robert Oppenheimer, who helped invent the atomic bomb in 1945 before advocating for controls on the proliferation of nuclear weapons, was made at a conference in Vienna on Monday, where civil, military and technology officials from more than 100 countries met to discuss the prospect of militarized artificial intelligence systems.

Although the integration of AI into military hardware is increasing at a rapid pace, the technology is still in its nascent stages.

But so far, there is no international treaty that prohibits or limits the development of lethal autonomous weapons systems (LAWS).

“This is the Oppenheimer Moment of our generation,” said Austrian Foreign Minister Alexander Schallenberg. “Now is the time to agree international rules and norms.”

During his opening remarks at the Vienna Conference on Autonomous Weapons Systems, Schallenberg described AI as the most significant advance in warfare since the invention of gunpowder more than a millennium ago.

The only difference is that AI is even more dangerous, he continued.

“Let’s at least make sure that the deepest and most far-reaching decision – who lives and who dies – is left in the hands of humans and not machines,” Schallenberg said.

The Austrian minister said the world needs to “ensure human control”, pointing to the worrying trend of military AI software replacing humans in the decision-making process.

“The world is approaching a tipping point for acting on concerns about autonomous weapons systems, and support for negotiations is reaching unprecedented levels,” said Steve Goose, arms campaigns director at Human Rights Watch.

“The adoption of a robust international treaty on autonomous weapons systems could not be more necessary or urgent.”

There are already examples of AI used in a military context with lethal effects.

Earlier this year, a report by +972 Magazine cited six Israeli intelligence officers who admitted to using an AI called ‘Lavender’ to classify up to 37,000 Palestinians as suspected militants, marking these people and their homes as acceptable targets for airstrikes.

Lavender was trained with data from Israeli intelligence’s decades-long surveillance of Palestinian populations, using the fingerprints of known militants as a model for what signal to look for in the noise, according to the report.

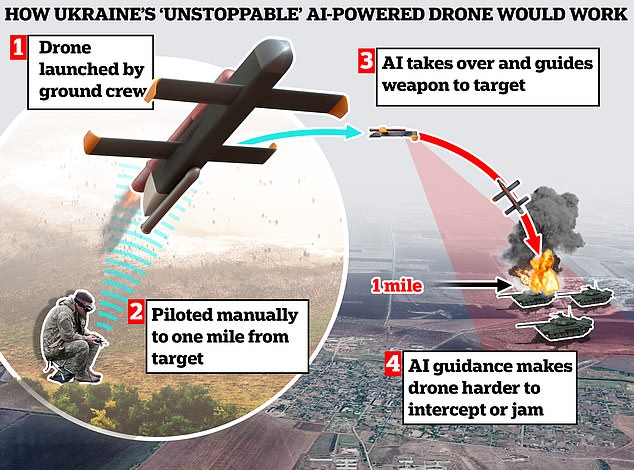

Meanwhile, Ukraine is developing artificially intelligent drones that could locate Russian targets from further away and be more resistant to electronic countermeasures in an effort to boost its military capabilities as the war continues.

Deputy Defense Minister Kateryna Chernohorenko said Kyiv is developing a new system that could autonomously discern, hunt and attack its targets from afar.

This would make drones more difficult to shoot down or jam, she said, and reduce the threat of retaliatory attacks for drone pilots.

“Our drones should be more effective and guided to the target without any operator.

“It should be based on visual navigation. We also call it ‘last mile targeting,’ targeting based on the image,” Chernohorenko told The Telegraph.

Monday’s LAWS conference in Vienna came as the Biden administration attempts to deepen separate discussions with China over nuclear weapons policy and the growth of artificial intelligence.

The spread of AI technology came up during wide-ranging talks between US Secretary of State Antony Blinken and Chinese Foreign Minister Wang Yi in Beijing on April 26.

The two sides agreed to hold their first bilateral talks on artificial intelligence in the coming weeks, Blinken said, adding that they would share views on how best to manage risks and security related to the technology.

As part of the normalization of military communications, U.S. and Chinese officials resumed discussions on nuclear weapons in January, but formal arms control negotiations are not expected any time soon.

China, which is expanding its nuclear weapons capabilities, urged in February that the largest nuclear powers first negotiate a no-first-use treaty among themselves.