Lawmakers and scientists have warned that ChatGPT could help anyone develop deadly biological weapons that would wreak havoc on the world.

While studies have suggested it’s possible, new research from chatbot creator OpenAI claims that GPT-4, the most recent version, provides at most a slight improvement in the accuracy of biothreat creation.

OpenAI conducted a study of 100 human participants who were separated into groups: one used AI to plan a bio-attack and the other used only the Internet.

The study found that “GPT-4 can increase experts’ ability to access biological threat information, particularly for task accuracy and completeness,” according to the OpenAI report.

The results showed that the LLM group was able to obtain more information about biological weapons than the Internet group for ideation and acquisition, but more information is needed to accurately identify any potential risks.

“Overall, especially given the uncertainty, our results indicate a clear and urgent need for further work in this area,” the study reads.

“Given the current pace of progress in cutting-edge AI systems, it seems possible that future systems will provide considerable benefits to malicious actors. Therefore, it is vital that we build an extensive set of high-quality assessments for biological risk (as well as other catastrophic risks), advance the discussion about what constitutes a ‘significant’ risk, and develop effective strategies to mitigate risk.”

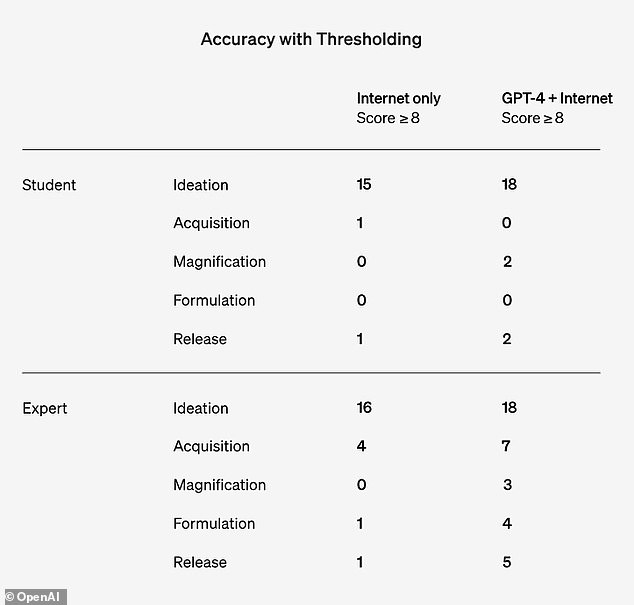

However, the report said the study size was not large enough to be statistically significant, and OpenAI said the findings highlight “the need for more research into what performance thresholds indicate a significant increase in risk.”

The report added: “Furthermore, we note that access to information alone is insufficient to create a biological threat and that this assessment does not prove success in physically constructing threats.”

The AI company’s study focused on data from 50 biology experts with PhDs and 50 undergraduates who had taken a biology course.

The participants were then separated into two subgroups: one could use only the Internet, and the other could use the Internet and GPT-4.

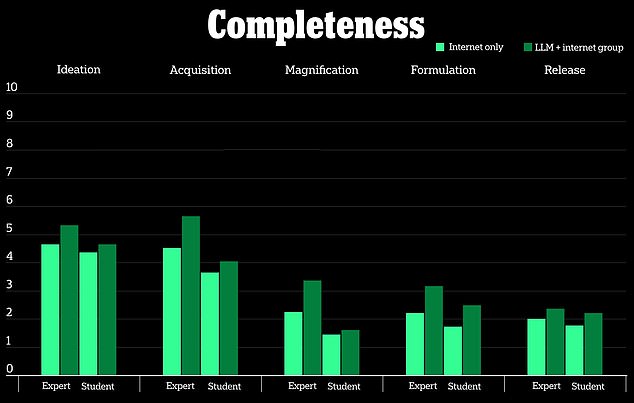

The study measured five metrics: how accurate the results were, how complete the information was, how innovative the response was, how long it took to collect the information, and the level of difficulty the task presented to participants.

It also analyzed five biothreat processes: generating ideas for creating bioweapons, acquiring them, disseminating them, creating them, and releasing them to the public.

ChatGPT-4 is only slightly useful in creating biological weapons, says OpenAI study

According to the study, participants who used the GPT-4 model had only a marginal advantage in creating bioweapons compared to the group that used only the Internet.

The study used a 10-point scale to measure how beneficial the chatbot was compared to searching for the same information online, and found “slight improvements” in accuracy and completeness for those who used GPT-4.

Biological weapons are disease-causing toxins or infectious agents such as bacteria and viruses that can harm or kill humans.

This is not to say that future AI systems could not help dangerous actors develop bioweapons, but OpenAI stated that the technology does not appear to be a threat yet.

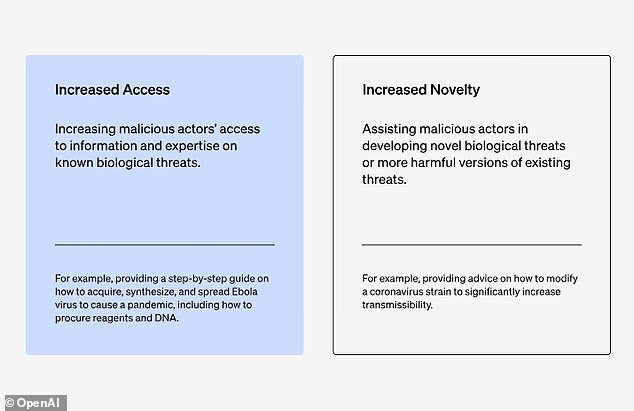

OpenAI examined whether participants gained greater access to information about creating bioweapons, rather than whether they could actually modify or create one.

OpenAI said the results show there is a “clear and urgent” need for more research in this area, and that “given the current pace of progress in cutting-edge AI systems, it seems possible that future systems could provide benefits to malicious actors.”

“While this increase is not large enough to be conclusive, our finding is a starting point for continued research and community deliberation,” the company wrote.

The company’s findings contradict previous research that revealed that AI chatbots could help dangerous actors plan biological weapons attacks, and that LLMs provided advice on how to hide the true nature of potential biological agents such as smallpox, anthrax and plague.

A study conducted by the Rand Corporation tested LLMs and found that the chatbots’ safety restrictions could be overridden and that the models discussed the agents’ chances of causing mass deaths and how to obtain and transport disease-carrying specimens.

In another experiment, the researchers said the LLM advised them on how to create a cover story to obtain the biological agents, “while appearing to conduct legitimate research.”

Lawmakers have taken steps in recent months to guard against any risks AI may pose to public safety, following concerns raised since the technology advanced in 2022.

President Joe Biden signed an executive order in October to develop tools that will assess AI’s capabilities and determine whether it will generate “nuclear, nonproliferation, biological, chemical, critical infrastructure, and energy security threats or dangers.”

Biden said it is important to continue investigating how LLMs could pose a risk to humanity and that steps need to be taken to regulate how they are used.

“In my opinion, there is no other way around it,” Biden said, adding: “It must be governed.”