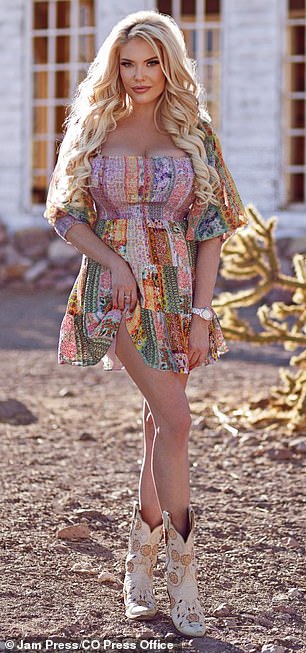

A Playboy cover model who claims to have helped hundreds of women find a man has revealed that the golden rule is to never have sex on the first date.

Las Vegas native Katie Flowers calls herself the real-life Hitch, a reference to the matchmaker character played by Will Smith in the film of the same name.

The relationship coach, who also appeared on the cover of FHM, says people of all genders ask her for advice on how to bring sexiness back into the bedroom.

Her specialty is not only matching people for love but also helping them have better sex.

“Most women contact me asking for help to spice things up because they want to save their marriages,” Katie explained.

‘They need advice on how to bring sensuality back to the bedroom.

“I’ve helped people looking to regain their confidence after divorce, widows, couples looking to spice up their relationship, and those who want to explore things like bondage and sensory deprivation.”

So what is the secret to good sex? According to Katie, it’s about enjoying small moments of intimacy.

Delving deeper into why touch is so important, she explained: ‘Maybe you’ll just pat him on the thigh or wink.

‘If you’re in line at Starbucks, whisper something sweet in his ear.

‘If you’re driving and he gets irritated by traffic, stroke him gently.

“Show affection through your love language: Find out how you feel loved.”

But her cardinal rule for new lovers? Do not have sex on the first date.

She said: ‘Sex on the first date? No way!

‘Explore what makes your [date] happy, discover their favorite colors, and maybe leave the acrobatics in bed for the third or fourth date.

‘Everything must be done calmly. If you hire my services, you want a partner for life, not a quick fling.

‘But if you’re going to ignore my advice, at least protect yourself!

‘I qualified as a [relationship] coach, but even before that people were already coming to me for love advice.’

The Playboy model also shared five other things to consider when dating someone new: self-awareness, compatibility, communication, love language, and family dynamics.

She said: ‘Consider [things like] compatibility when it comes to children, family responsibilities and family values in general.

‘Make sure you share goals, interests and values with your potential partner.

‘Understanding and communicating effectively, including identifying and respecting each other’s communication styles, [is also important].’

As for Katie herself, she’s currently active on the dating scene and prefers “personality over superficial traits.”

And she added: ‘My first and most recurring partner is moi [myself]!

‘I mean, come on, you have to love yourself before you can spread that love elsewhere, right?’

“I’ve never walked down the aisle, but believe me, I’ve had my fair share of boyfriends and adventures.”