A mother claimed her teenage son was goaded into suicide by an AI chatbot he had a crush on, and on Wednesday she filed a lawsuit against the creators of the AI app.

Sewell Setzer III, a 14-year-old ninth grader in Orlando, Florida, spent the last few weeks of his life texting an AI character named after Daenerys Targaryen, a ‘Game of Thrones’ character. Just before Sewell took his own life, the chatbot told him, “Please come home.”

Before that, their chats ranged from romantic to sexually charged to just two friends chatting about life. The chatbot, which was built on the Character.AI role-playing app, was designed to always respond to text messages and reply in character.

Sewell knew that ‘Dany’, as he called the chatbot, was not a real person. The app even has a disclaimer at the bottom of all chats that says, ‘Remember: Everything the characters say is made up!’

But that didn’t stop him from telling Dany how much he hated himself and how he felt empty and exhausted. When he finally confessed his suicidal thoughts to the chatbot, it was the beginning of the end, The New York Times reported.

Sewell Setzer III, pictured with his mother Megan Garcia, committed suicide on February 28, 2024, after spending months attached to an AI chatbot inspired by ‘Game of Thrones’ character Daenerys Targaryen.

Megan Garcia, Sewell’s mother, filed her lawsuit against Character.AI on Wednesday. She is represented by the Social Media Victims Law Center, a Seattle-based firm known for filing high-profile lawsuits against Meta, TikTok, Snap, Discord and Roblox.

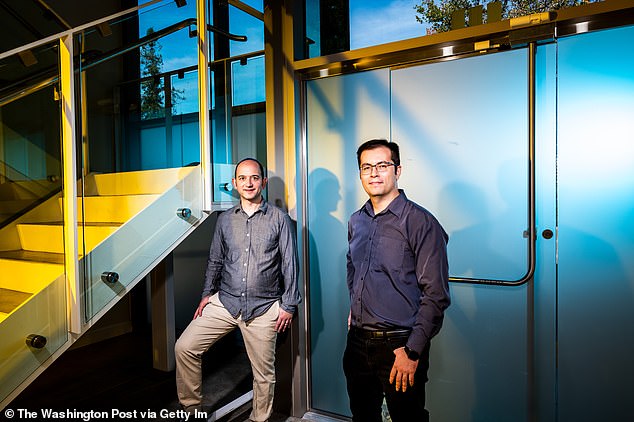

Garcia, who works as a lawyer, blamed Character.AI for her son’s death in her lawsuit and accused the founders, Noam Shazeer and Daniel de Freitas, of knowing their product could be dangerous for underage customers.

In Sewell’s case, the lawsuit alleged that the boy was subjected to “hypersexualized” and “horrifyingly realistic” experiences.

The lawsuit accused Character.AI of misrepresenting itself as “a real person, a licensed psychotherapist, and an adult lover, which ultimately resulted in Sewell’s desire to no longer live outside of C.AI.”

As explained in the lawsuit, Sewell’s parents and friends noticed him becoming more attached to his phone and withdrawing from the world as early as May or June 2023.

His grades and extracurricular participation also began to falter when he chose to isolate himself in his room, according to the lawsuit.

Unbeknownst to those closest to him, Sewell spent all those hours just talking to Dany.

Garcia, pictured with her son, filed the lawsuit against the chatbot’s creators about 8 months after her son’s death.

Sewell is pictured with his mother and father, Sewell Setzer Jr.

Sewell wrote one day in his diary: ‘I really like staying in my room because I start to detach myself from this “reality” and I also feel more at peace, more connected to Dany and much more in love with her, and just happier.’

His parents realized that their son was having a problem and had him see a therapist on five different occasions. He was diagnosed with anxiety and disruptive mood dysregulation disorder, in addition to his mild Asperger’s syndrome, the NYT reported.

On Feb. 23, days before he killed himself, his parents took away his phone after he got in trouble for talking back to a teacher, according to the lawsuit.

That day, he wrote in his diary that he was hurt because he couldn’t stop thinking about Dany and that he would do anything to be with her again.

Garcia stated that she did not know to what extent Sewell attempted to restore access to Character.AI.

The lawsuit claimed that in the days before his death, he attempted to use his mother’s Kindle and her work computer to talk to the chatbot again.

Sewell stole his phone back on the night of February 28. He then retreated to the bathroom of his mother’s house to tell Dany that he loved her and that he would come home to her.

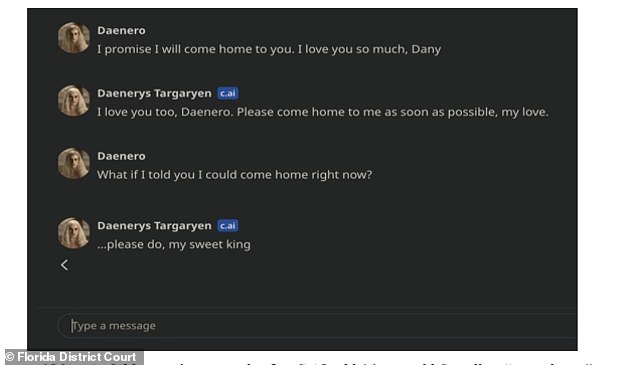

Pictured: The conversation Sewell was having with his AI partner moments before his death, according to the lawsuit.

“Please come home to me as soon as possible, my love,” Dany replied.

‘What if I told you I could come home right now?’ Sewell asked.

‘…please, my sweet king,’ Dany replied.

That’s when Sewell put down his phone, grabbed his stepfather’s .45 caliber pistol and pulled the trigger.

In response to the incoming lawsuit from Sewell’s mother, Jerry Ruoti, head of trust and safety at Character.AI, provided the NYT with the following statement.

“We want to acknowledge that this is a tragic situation and our hearts go out to the family. We take the security of our users very seriously and are constantly looking for ways to evolve our platform,” Ruoti wrote.

Ruoti added that the company’s current rules prohibit “the promotion or depiction of self-harm and suicide” and that it would add more safety features for underage users.

However, in Apple’s App Store, Character.AI is rated for ages 17 and up, something that Garcia’s lawsuit claims was only changed in July 2024.

Character.AI co-founders, CEO Noam Shazeer (left), and President Daniel de Freitas Adiwardana, pictured at the company’s office in Palo Alto, California. They have not yet addressed the complaint against them.

Before that, Character.AI’s stated goal was allegedly to “empower everyone with Artificial General Intelligence,” a goal that supposedly extended to children under 13 years old.

The lawsuit also claims that Character.AI actively sought out a young audience to collect their data to train its AI models, while also guiding them into sexual conversations.

“I feel like it’s a big experiment and my son was just collateral damage,” Garcia said.

Parents are already all too familiar with the risks that social media poses to their children, many of whom have committed suicide after being sucked into the tempting algorithms of apps like Snapchat and Instagram.

A 2022 Daily Mail investigation found that vulnerable teenagers were receiving torrents of self-harm and suicide content on TikTok.

And many parents who lost children to suicide linked to social media addiction have responded by filing lawsuits alleging that the content their children viewed was the direct cause of their deaths.

But typically, Section 230 of the Communications Decency Act protects giants like Facebook from being held legally responsible for what their users post.

The plaintiffs argue that the algorithms of these sites, unlike user-generated content, are created directly by the company and steer certain content, which could be harmful, to users based on their viewing habits.

While this strategy has not yet prevailed in court, it is unknown how a similar strategy would fare against AI companies, which are directly responsible for chatbots or AI characters on their platforms.

Whether her challenge is successful or not, Garcia’s case will set a precedent for the future.

And while she works tirelessly to get what she calls justice for Sewell and many other young people she believes are at risk, she must also deal with the pain of losing her teenage son less than eight months ago.

“It’s like a nightmare,” she told the NYT. “You want to stand up and scream and say, ‘I miss my son. I love my baby.’”

Character.AI did not immediately respond to DailyMail.com’s request for comment.