United Nations chief Antonio Guterres has declared that the Middle East is “on the brink” and that those living in the region face “a real danger of a devastating, large-scale conflict.”

Guterres told the emergency Security Council meeting, called after Iran’s attack: “Regional – and indeed global – peace and security are being undermined by the hour. Neither the region nor the world can afford more wars.”

Speaking after Iran’s attack on Israel, Guterres said retaliatory acts involving the use of force are prohibited under international law, while the United States told the Security Council that it would work to hold Tehran accountable at the UN.

Iran’s ambassador insisted that the attack was “in response to the Israeli regime’s military aggression,” highlighting the April 1 attack on the Iranian embassy building in Damascus, in which seven IRGC officers were killed.

Amir Saeid Iravani said Iran’s armed forces launched last night’s attacks in “self-defense” and that they were “necessary and proportionate, they were precise and they only targeted military targets.”

Israel’s ambassador to the UN, Gilad Erdan, requested on Saturday that the council hold the meeting. During his speech, he showed a video of missiles over the Al Aqsa Mosque in Jerusalem to highlight the scale of the attack.

United Nations Secretary-General Antonio Guterres (L) delivers the keynote address during a UN Security Council meeting on the situation in the Middle East.

Iran’s ambassador to the United Nations, Amir Saeid Iravani, attends a meeting on the situation in the Middle East

Israel’s ambassador to the UN, Gilad Erdan, shows a video during a meeting of the United Nations Security Council on the situation in the Middle East

“This attack crossed all red lines and Israel reserves the right to retaliate,” Erdan said. “We are not a frog in boiling water, we are a nation of lions.

“After such a massive and direct attack against Israel, the entire world, much less Israel, cannot settle for inaction. We will defend our future.”

He called for more sanctions against Iran and its recognition as a terrorist state, saying: “For the sake of the world, Iran must be stopped today.”

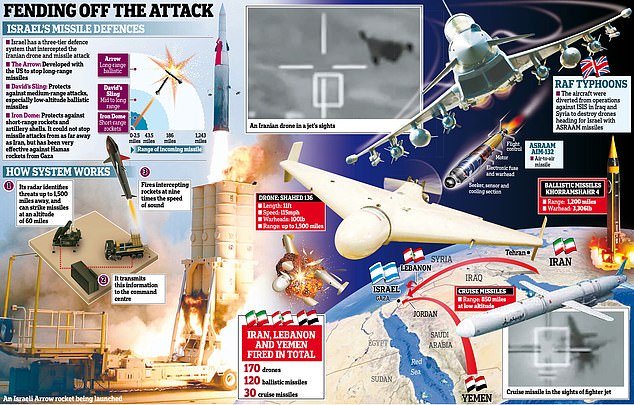

He highlighted the success of Israel’s Iron Dome and allied efforts in stopping the 350 missiles he said Iran launched at Israel, saying the joint response intercepted “99 percent” of them.

“The Iranian attack is a serious threat to global peace and security and I hope that the Council will use all means to take concrete measures against Iran,” Erdan wrote in a post on X before the meeting.

Guterres tonight reminded the council that retaliatory acts involving the use of force are prohibited under international law, adding that now is “the time to defuse and de-escalate.”

Drones or missiles compete for targets at undisclosed locations in northern Israel on April 14.

He also called for an immediate humanitarian ceasefire in Gaza after more than six months of fighting, the unconditional release of all hostages and the unhindered delivery of humanitarian aid to Gaza as it faces famine.

Meanwhile, the United States told the Security Council that it would work to hold Tehran accountable at the UN.

U.S. Deputy Ambassador to the U.N. Robert Wood called on the 15-member body to unequivocally condemn Iran’s attack, saying the Security Council has an obligation not to let Iran’s actions go unanswered.

“In the coming days, and in consultation with other member states, the United States will explore additional measures to hold Iran accountable here at the United Nations,” he said, without specifying what action the United States would take.

G7 leaders met this afternoon and condemned Iran’s attack on Israel “in the strongest terms.”

“Let me be clear: If Iran or its proxies take actions against the United States or further actions against Israel, Iran will be held responsible,” Wood said, adding that the United States took note of Guterres’ comments and that Washington’s actions have been defensive.

Tehran, which had vowed retaliation for what it called an Israeli attack on a building next to its consulate in Damascus on April 1 that killed seven of its officers, said its attack was punishment for “Israeli crimes.”

Israel has neither confirmed nor denied responsibility for the consulate attack.